Do you want to become a Prompt Engineer, but you’re not sure how to go about it, or even what it really involves?

Is it just using ChatGPT?

Do you need to learn to build AI?

How about Machine learning?

Or learning coding?

Don't worry because in this guide, I'll answer all this and more.

You'll learn what Prompt Engineers actually do, the skills needed for the role, get resources to learn those skills, as well as how to get started in this exciting new career.

Let's get started...

What is a Prompt Engineer and what do they actually do?

In simple terms, a Prompt Engineer works with pre-built AI models to help companies to achieve their goals.

It sounds simple because, in theory, it is!

But just like with any role, by having a deeper understanding of how these AI models work, and understanding the different options and techniques available, a good Prompt Engineer can make the company’s AI initiatives significantly more effective and efficient, and help them to achieve tasks it wouldn’t have the resources or time for normally.

For example

Finance companies are hiring Prompt Engineers to improve how they analyze stocks and investments so they can be more effective and have better data for human analysts.

In the example above, they estimate it will return over $1.5 billion in value to their bank alone!

So how would they do this?

Well it depends.

In this example, the Prompt Engineer might:

Start by understanding JPMorgan’s goals

Assess their data sources (i.e. what proprietary data they have that the existing AI models would not have been trained on)

Then develop a workflow that involves selecting a pre-built AI model (or models) best suited for the task

Customize the model with different prompting techniques including the System Message, prompt chaining, and transforming user inputs, plus augmenting with additional data

Fine-tune the model to further specialize it for the task

And finally, the Prompt Engineer will develop benchmarks and run tests to ensure the AI model is effective and efficient, both now and on an on-going basis

Like I say, the process varies depending on the goal, but what you have to understand is that this role is not about building AI. It’s about helping companies use it properly.

Now I know what you might be thinking:

“ChatGPT and these other tools are easy to use. You just ask it to do something and it does it. Why do we need Prompt Engineers?".

And sure, anyone can ask it to do something, but that doesn’t always mean it is well-suited for your task or will give you the correct result.

This is because it takes specific, specialized skills to make sure you get the correct output each time - which is especially important when dealing with complex tasks or tasks that require a high degree of accuracy.

For example

An AI that’s 95% accurate is probably perfectly fine for most tasks, but not for life-or-death tasks like identifying and diagnosing cancer. So that’s where Prompt Engineers come in. Because they know how to design more advanced AI workflows that guide the model toward useful, accurate, and consistent results. This involves a lot of testing, adjustment, and fine-tuning until it works.

Could this be the job for you?

Well, if you’ve ever taken a vague request and then turned it into something clear, step-by-step, and actionable, then you already have the kind of mindset this role depends on.

Talking of skills, let’s get a big misconception out of the way.

Do you need to learn to code to be a Prompt Engineer?

Well, that depends.

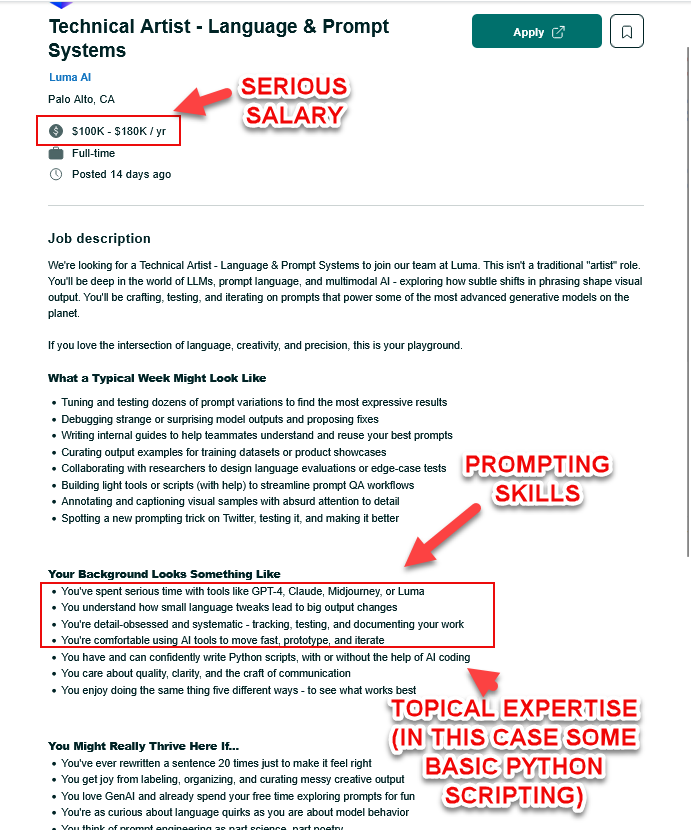

It’s certainly possible to get hired as a Prompt Engineer with no coding background other than the required knowledge and skills around LLMs and prompting.

However, coding skills are going to help significantly when incorporating an AI model into a full workflow. Fortunately, even just basic coding skills can take you a long way in this regard.

Coding isn’t the only skill that’s useful for Prompt Engineers though…

In fact, one of my favorite parts of prompt engineering is that every single person’s unique background and skills allow them to bring a unique and useful perspective to the field.

For example

If a company works with legal, healthcare, or finance data, then having some familiarity with that respective field will help you better craft effective prompts and spot pitfalls that AI models might fall into. (That’s not to say that you couldn’t go in and learn what you need on the job, but it wouldn’t hurt.)

One of the best ways to think about being a Prompt Engineer is like being a trained chef.

You’ve learned to cook and you know the tools and the techniques, so you can walk into almost any kitchen and make it work. But if it’s a restaurant that specializes in a certain type of cuisine, then having some experience with those flavors will definitely help. It’s not essential, but it makes you more effective.

So yeah, all you need is the core skills to get hired, but your unique background and experiences will give you a unique edge for prompt engineering in relevant sectors.

Do you need a degree to become a Prompt Engineer?

Probably not.

Prompt Engineering is such a new field that employers usually take the tech industry approach: they only care that you can do the work, not where you learned the skills.

So if you can come in and solve their problems that’s all they really want.

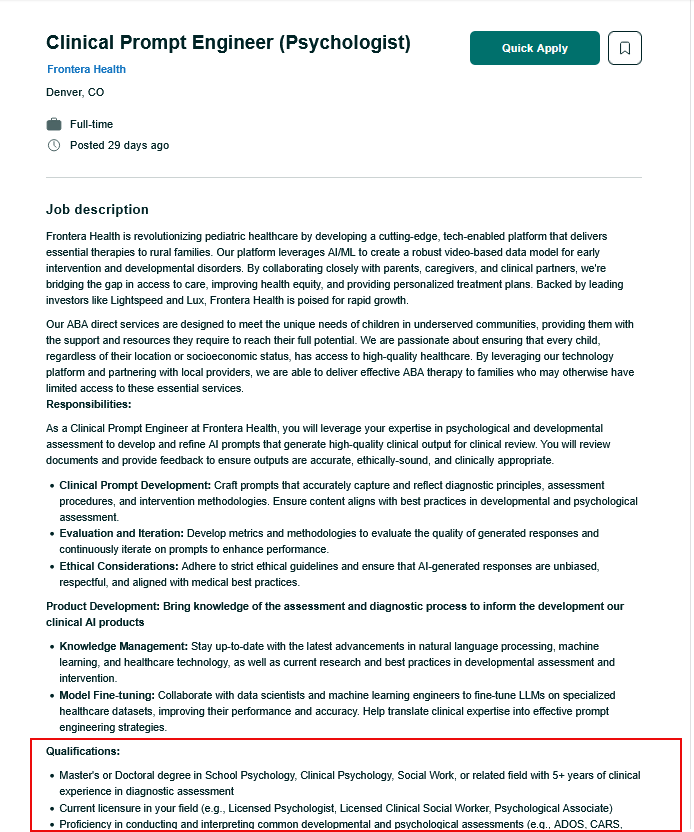

However, if it's a very specialist topic then they may want you to have either a degree or relevant experience in that particular field.

For example

This one here is building an AI function for a psychology company, so they want people with a masters in psychology AND a prompt engineering background.

Fun fact: this one, with more requirements, pays $90k-$120k and requires two high levels of training and 5 years of experience, whereas the other pays $100k-$180k without a degree.

(All they want is some added prompting experience, and you can pick up skills like that easily. In fact, we have a course that can teach you all you need to know.)

So like any industry, it can vary. You can definitely find great jobs that just want your prompt skills, with possibly some extra topical expertise and no degree.

Is Prompt Engineering a good career path?

In my view, the answer is absolutely, 100% yes.

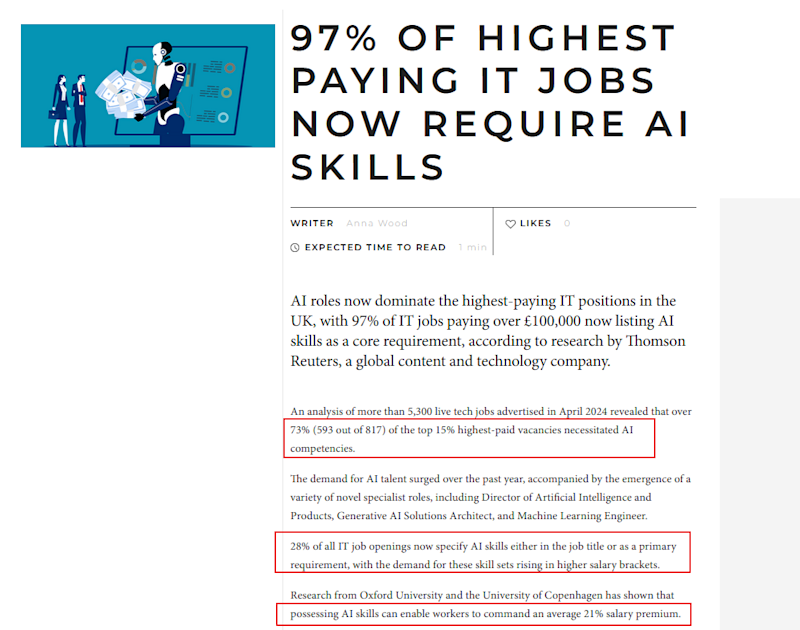

That’s because the core skill of Prompt Engineering is understanding how leading AI models (including the Large Language Models (LLMs) that underlie ChatGPT) work under the hood and how to use them most effectively. That’s a skill that is only going to grow in importance as AI becomes more and more pervasive in all aspects of our lives.

However, there are some changes at the moment with how new the industry is, and how these things evolve.

So let me explain:

When AI tools first exploded in popularity, prompt engineering was in very high demand. Salaries were high, people wanted to use this ASAP, and there weren’t many people with hands-on skill.

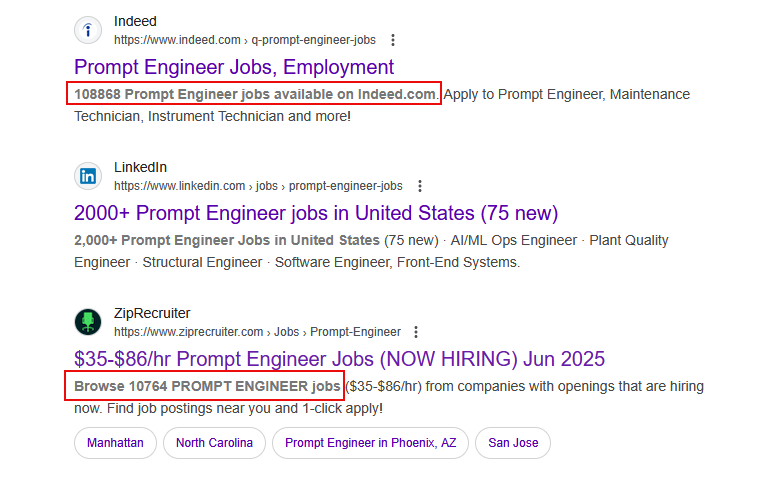

Even today there are around 10,000 open roles in the US alone, so it’s a great industry with a lot of opportunity.

However, it has slowed down since.

Not because people don’t want Prompt Engineers any more. But because many companies who were previously advertising for specialist Prompt Engineers now realize that they just want their current employees to learn prompt engineering skills on top of their existing, specialized skills.

(Perhaps they didn’t fully understand the role and just wanted to jump on board.)

If anything, it just goes to show how important this skill is becoming. In that same study, they found that people who added prompting skills to their skillset saw a 21% salary increase!

Even if you learned prompt engineering and never applied for a specific role, you could upskill like crazy in your current company, or simply make your work life easier by automating aspects of it using AI and prompt engineering.

I fully expect that, just as everyone expects you to be able to use Microsoft Word and Excel in the current work environment, in the not-too-distant future every employer will expect you to have prompt engineering skills.

That’s why ultimately I believe that for most people prompt engineering is not a job in and of itself (though there are a surprisingly large number of standalone prompt engineering jobs out there these days, as discussed above). Instead, for most people prompt engineering is “just” a skill. But it's a skill that's applicable to virtually every other job out there. It’s a skill that will supercharge your abilities to make you better in whatever your field is.

And that means if you learn this skill you’ll be in greater demand in almost any professional industry moving forward.

What is the average salary of a Prompt Engineer?

Because the industry is still kind of new, it really varies.

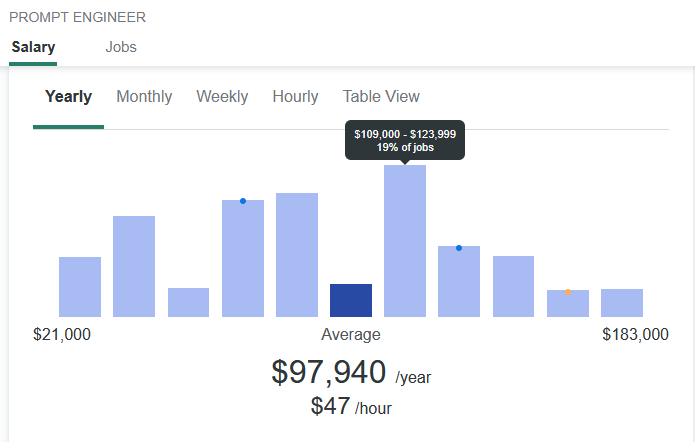

According to the 10,000+ jobs listed on ZipRecruiter, the average salary for a Prompt Engineer is around $97,000 per year:

Like all jobs though, this can vary on location and expertise, and the data is a little skewed with some outliers.

The majority of roles are around $109k-$123k a year, but you also have some around $180k, and then some at $21k which are super basic. Those are normally less about prompting and refining a model, and more about manually inspecting the output, like a glorified QA tester.

How long does it take to learn to become a Prompt Engineer?

Honestly, not that long.

I have a course that teaches everything you need to know around Prompt Engineering, and it takes most students just 24 days to complete.

That's based on the average student only being able to do about 1 hour a day, so if you can dedicate more time to learning, then you could pick it up even quicker.

And that’s with no prior coding or even AI experience either!

So even if you’ve never worked with ChatGPT or another AI before, you’ll be able to learn quickly because the course really takes a beginner-friendly, step-by-step approach through everything you need to know.

The basics of prompt engineering are surprisingly accessible. What takes more time is getting good at handling real-world tasks, such as working with messy data, unclear goals, or unusual edge cases. That part comes through experience.

But the fastest way to learn is by working on actual projects such as building tools, solving business problems, or even just experimenting with personal AI workflows. Every time you write, refine, and test a prompt, you’re leveling up.

That's why there are 8 projects in the course, including everything from fun ones like coding your own Snake Game to running your own advanced prompt tests with multiple variables and over 10,000 experiments! But you can also tweak these projects and build your own variations to get even more hands-on experience if you wanted to.

However, if you're learning on your own, then it will definitely take longer, simply because you’ll have to search around for up-to-date information, figure out what you need to learn, find projects to create, etc.

The benefit is that it's free to learn this way, but you may take longer, get stuck in analysis paralysis, and never apply for roles, which sucks.

I don’t want to leave you hanging though, so let’s break down the kind of skills you’ll need to work on.

What skills do you need to learn to become a Prompt Engineer?

Here are the core skills that matter most if you want to become a Prompt Engineer.

#1. Have a deep understanding of how Large Language Models work

Large Language Models (LLMs) like GPT-4 don’t think the way humans do, so if you want to work with them, you need to understand all the intricacies.

I don’t have space to cover all this in depth as obviously it's a huge topic. However, here’s a quick example of some things to be aware of with regards to memory.

You see, LLMs don’t truly “remember” anything the way we expect. Instead, they generate responses based on patterns in text, one token (roughly a word or part of a word) at a time. Just like you and I have a “working memory” where we keep the things we’re actively thinking about, LLMs have an equivalent called a context window. That context includes your prompt and the model’s reply, but only up to a certain size, which can cause memory and quality issues.

So how much memory do they have?

Well right now, most models can only handle a set number of tokens, which are chunks of text (not the same as words). Once you hit that limit, which is around 8,000 tokens for GPT-4 (or more in the 32K version), anything earlier in the conversation gets dropped from memory. Kind of like when you’re having a long conversation and it's hard to remember all the details.

However, with an LLM, this means that if your prompt is too long, or you’ve had too many back-and-forths, the model may forget key details from earlier.
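To make the context window idea concrete, here’s a minimal sketch of one way a tool could keep a conversation under a token budget: keep the System Message and drop the oldest turns first. The 4-characters-per-token estimate, the `trim_history` helper, and the message format are my own illustrative assumptions, not how any particular model or API works internally. In a real project you’d count tokens with the tokenizer for your model.

```python
from typing import Dict, List

# Assumption: ~4 characters per token is only a rough rule of thumb for
# English text; a real tokenizer for your model will give different counts.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Very rough token estimate (hypothetical helper, not a real tokenizer)."""
    return max(1, len(text) // CHARS_PER_TOKEN)


def trim_history(messages: List[Dict[str, str]], budget: int) -> List[Dict[str, str]]:
    """Keep the system message plus the most recent messages that fit the budget."""
    system = [m for m in messages if m["role"] == "system"]
    rest = [m for m in messages if m["role"] != "system"]

    used = sum(estimate_tokens(m["content"]) for m in system)
    kept: List[Dict[str, str]] = []
    # Walk backwards so the newest messages are considered first.
    for message in reversed(rest):
        cost = estimate_tokens(message["content"])
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    return system + list(reversed(kept))


history = [
    {"role": "system", "content": "You are a contract analyst."},
    {"role": "user", "content": "A" * 400},       # roughly 100 tokens
    {"role": "assistant", "content": "B" * 400},  # roughly 100 tokens
    {"role": "user", "content": "C" * 400},       # roughly 100 tokens
]
trimmed = trim_history(history, budget=210)
print([m["role"] for m in trimmed])
```

With a budget of 210, the oldest user message no longer fits, so it is the one that gets dropped. That’s exactly the “forgetting” described above, except here you control which parts are lost.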

This shows up in real tasks all the time.

A model might:

Start strong but then lose track of instructions partway through

Misinterpret a final step because earlier definitions fell out of context

Repeat itself or change tone midway through a longer output

Not only that, but you’ll also notice that models tend to give more weight to the most recent instructions, especially ones near the end of a prompt. So if you tack something on at the end like “make sure this sounds formal,” that final instruction can override earlier tone settings.

This kind of behavior isn’t obvious when you’re using ChatGPT casually, so the day-to-day user isn’t even aware that it’s happening.

In fact, you’re still getting an output so you might assume it's all fine. However, if you’re building tools, training workflows, or designing outputs that need to be consistent, accurate, and format-specific then this can totally derail your project.

For example

Let’s imagine that you’re designing a multi-step prompt to extract key terms from legal contracts, explain them in plain English, and format the result as a JSON object.

You test it with a short document and it works great, but when you try it on a real contract that’s ten pages long, the output breaks halfway through. Perhaps some explanations are missing, the formatting is off, or it starts summarizing instead of extracting.

That’s a context issue where the model hit the token limit, forgot earlier instructions, and started guessing.

The average user has no idea, but a Prompt Engineer expects this to happen and builds with it in mind so that it doesn’t break things. They might:

Shorten the input (prompt)

Put limits on the output length

Utilize models with different context window lengths

Test models for better recall of “needle in the haystack” details

Automatically prompt the model to summarize the conversation into the salient details, and then supply those details in a new context window

Shift the structure to keep key constraints near the end

But you can only do this when you fully understand the processes and limitations of the tools.
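Here’s a rough sketch of two of those tactics combined: shortening the input by splitting a long contract into chunks, and keeping the key constraints near the end of each prompt. The function names, the 150-character limit, and the dummy contract are assumptions for illustration only. A real workflow would size chunks in tokens for the specific model.

```python
from typing import List


def split_into_chunks(text: str, max_chars: int) -> List[str]:
    """Split text on paragraph boundaries so chunks stay under max_chars.

    Note: a single paragraph longer than max_chars is still emitted whole.
    """
    chunks: List[str] = []
    current = ""
    for paragraph in text.split("\n\n"):
        candidate = f"{current}\n\n{paragraph}" if current else paragraph
        if len(candidate) > max_chars and current:
            chunks.append(current)
            current = paragraph
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks


def build_chunk_prompt(chunk: str, constraints: str) -> str:
    """Put the document first and the key constraints last."""
    return f"Contract excerpt:\n{chunk}\n\nInstructions:\n{constraints}"


# Dummy six-clause contract standing in for a real ten-page document.
contract = "\n\n".join(f"Clause {i}: " + "x" * 50 for i in range(1, 7))
constraints = "Extract key terms, explain each in plain English, and return JSON only."

chunks = split_into_chunks(contract, max_chars=150)
prompts = [build_chunk_prompt(chunk, constraints) for chunk in chunks]
print(f"{len(chunks)} chunks, each with the instructions at the end")
```

Each chunk gets its own prompt with the same instructions, so no single call has to hold the whole contract in context.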

#2. Learn how to write clear, structured, and repeatable prompts

This is one of those things that seems simple - and that’s because it is. But it’s also something that people do poorly all the time, even when they know it’s simple.

The way you phrase a prompt has a huge impact on what you get back. That might seem obvious, but once you start using AI tools for real tasks, you’ll see how easily things go off track when your instructions aren’t precise.

This is because language models don’t read between the lines. They’re not human, they just sound human. They don’t know what you meant, they respond to what you wrote. That’s why prompt engineering is really about structured thinking. You need to clearly define the task, shape the format of the output, and give the model everything it needs to succeed without overwhelming it.

This is especially important in professional settings, where outputs need to be accurate, repeatable, and easy to plug into a larger workflow.

For example

Imagine you're working with a law office that wants to analyze contracts and flag clauses that introduce long-term risk.

Here’s a prompt someone might start with:

“Highlight risky clauses in this contract.”

That’s too vague though.

What counts as risky?

What should the model return? A highlight? A list? A summary?

Without specifics, the model will guess and you’ll get inconsistent results. So a Prompt Engineer would break it down into clearer instructions.

Perhaps something like:

“Read the following contract and identify any clauses that could present long-term risk to the client”

“Define 'risk' as clauses that create financial penalties, automatic renewals, or lock-in periods longer than one year”

“For each clause, return the full clause text and a brief explanation of why it's considered a risk”

“Format the response as a numbered list in markdown”

Now the model knows how to reason through the task, it has a definition of “risk,” a structure to follow, and a format that’s easy for someone else to review or reuse.
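If you wanted to reuse that prompt across many contracts, one option is to wrap it in a small function. This is just a sketch: the `build_risk_prompt` name and the `<contract>` delimiter tags are my own choices for the example, not a required convention.

```python
def build_risk_prompt(contract_text: str) -> str:
    """Assemble the four instructions above into one reusable prompt."""
    instructions = [
        "Read the following contract and identify any clauses that could "
        "present long-term risk to the client.",
        "Define 'risk' as clauses that create financial penalties, automatic "
        "renewals, or lock-in periods longer than one year.",
        "For each clause, return the full clause text and a brief explanation "
        "of why it's considered a risk.",
        "Format the response as a numbered list in markdown.",
    ]
    numbered = "\n".join(f"{i}. {line}" for i, line in enumerate(instructions, 1))
    # Delimiters make it clear where the instructions stop and the data starts.
    return f"{numbered}\n\n<contract>\n{contract_text}\n</contract>"


prompt = build_risk_prompt("This agreement renews automatically every 24 months.")
print(prompt)
```

Because the instructions live in one place, every contract gets exactly the same definition of “risk” and the same output format.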

See the difference?

Even then we can take it further…

#3. Learn advanced prompting techniques

Once you understand how these models work and are comfortable writing clear, structured prompts, the next step is learning more advanced prompting techniques in order to handle more complex tasks or improve the model’s abilities.

So let’s break a few of them down.

Few-shot prompting

Few-shot prompting is when you give the model a few examples of what you want before asking it to do the task. This way you improve the chances of getting the desired output.

You can think of it as training the model on-the-fly (referred to by Prompt Engineers as “In-Context Learning”, because the model is learning inside the context window). You’re not updating the model’s weights like in machine learning, you’re just showing patterns it can follow within the same prompt.

This technique works best when:

The task is slightly subjective or nuanced

The response needs to match a particular format

You want consistency across many examples without writing custom prompts for each one

For example

Let’s say your team has thousands of customer reviews, and you want to organize them so you can quickly identify negative feedback and pass it to your support team. How would we do this?

Well we could try and get the model to find these, but tone and sentiment are difficult for an LLM to always understand. So rather than just asking it, we can also use few-shot prompting to give it clear examples of each sentiment to learn from first.

It could be something like:

“Classify the following reviews as Positive, Negative, or Neutral:

Review #1: “The checkout process was seamless and fast.” Sentiment: Positive

Review #2: “I had to refresh the page three times before my order went through.” Sentiment: Negative

Review #3: “Shipping time was fine, nothing special.” Sentiment: Neutral"

By doing this, you’re showing the model how you want it to classify, and not just telling it what to do. It can then see your reasoning in the examples and match that pattern in the next response.

You can even refine it further, by adding in more examples and helping it to understand the first batch that it works on. This way you’re working alongside it to clarify edge cases or train it to follow stricter criteria.

TL;DR Few-shot prompting helps you get reliable output without fine-tuning a model or writing extra code. If you set up a prompt like this inside a spreadsheet or automation tool, you can run the same pattern over hundreds or thousands of entries. This saves time and ensures your classifications are consistent.
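As a sketch of how that pattern could be run over many entries, the snippet below builds one few-shot prompt per review. The three labeled examples come from the prompt above. The helper name and the two new reviews are made up for illustration, and actually sending each prompt to a model is left out.

```python
from typing import List, Sequence, Tuple

EXAMPLES: List[Tuple[str, str]] = [
    ("The checkout process was seamless and fast.", "Positive"),
    ("I had to refresh the page three times before my order went through.", "Negative"),
    ("Shipping time was fine, nothing special.", "Neutral"),
]


def build_few_shot_prompt(review: str, examples: Sequence[Tuple[str, str]] = EXAMPLES) -> str:
    """Prepend labeled examples, then leave the final label blank for the model."""
    lines = ["Classify the following reviews as Positive, Negative, or Neutral:", ""]
    for text, label in examples:
        lines.append(f'Review: "{text}"')
        lines.append(f"Sentiment: {label}")
        lines.append("")
    # The unlabeled review goes last so the model completes the pattern.
    lines.append(f'Review: "{review}"')
    lines.append("Sentiment:")
    return "\n".join(lines)


# Hypothetical new reviews to classify.
new_reviews = ["The app crashed twice during payment.", "Great support team!"]
prompts = [build_few_shot_prompt(review) for review in new_reviews]
print(prompts[0])
```

Every prompt carries the same examples and ends on an empty “Sentiment:” line, which nudges the model to answer with just the label.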

It’s especially useful when building internal tools or workflows where predictability matters more than creativity.

Chain-of-thought prompting

Chain-of-thought prompting is when you ask the model to reason through a task step-by-step instead of jumping straight to an answer. This works especially well when a task involves logic, multiple conditions, or any situation where the model might otherwise make a shallow guess.

Unlike few-shot prompting, which shows examples of what the output should look like, chain-of-thought prompting tells the model how to think before it answers. You’re guiding the process, not just the format.

This technique is especially useful when:

The task involves multi-step reasoning

You need the model to justify its answers

The output depends on interpreting rules or conditions

For example

Let’s say you’re reviewing a policy to check whether it complies with a company’s standards.

You could just ask: “Does this policy meet the compliance checklist?”

Again though, this is a little vague, and the model might simply say yes or no, without showing why. That’s risky, especially if the decision affects legal or financial outcomes because it might just be guessing or hallucinating.

So instead, you can write:

“Read this policy and evaluate it against each of the five compliance standards listed below. For each item, say whether the policy meets the standard and briefly explain your reasoning. Think through each one step-by-step.”

Now, you’re not just getting an answer, you’re also getting the logic behind it.

Handy right?

The interesting part is that studies have shown that these models actually perform better when they have to think through their actions step-by-step. And as a Prompt Engineer, you’re always thinking about the empirical ways to elicit the type of behavior you want to see in your model; in this case, you’re trying to elicit reasoning.

This kind of step-by-step thinking is especially useful when:

You’re handling rules, calculations, or checklists

You need to audit or review the model’s logic

The answer depends on the context or order of reasoning

It also helps reduce hallucinations, because when a model is forced to break things down, it tends to be more accurate, less confident about guesses, and easier to debug when something goes wrong.

You’ll see this used in education tools, legal automation, workflow audits, and even in customer support systems that have to follow branching logic based on what a user says.
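Here’s a minimal sketch of the compliance example as code: a prompt that asks for step-by-step reasoning per standard, plus a small parser that pulls out the final verdicts so they can be audited. The two standards, the “Standard N: MEETS/FAILS” line format, and the sample reply are all my own assumptions for the example. The reply is hand-written, not real model output.

```python
import re
from typing import Dict, List


def build_compliance_prompt(policy: str, standards: List[str]) -> str:
    """Ask for reasoning on each standard, then a verdict line we can parse."""
    checklist = "\n".join(f"{i}. {s}" for i, s in enumerate(standards, 1))
    return (
        "Read this policy and evaluate it against each of the compliance "
        "standards listed below. Think through each one step-by-step.\n"
        "For each standard, briefly explain your reasoning, then end with a "
        "line in the form 'Standard <number>: MEETS' or 'Standard <number>: FAILS'.\n\n"
        f"Standards:\n{checklist}\n\nPolicy:\n{policy}"
    )


def parse_verdicts(reply: str) -> Dict[int, bool]:
    """Map each standard number to True (meets) or False (fails)."""
    pattern = re.compile(r"^Standard (\d+): (MEETS|FAILS)$", re.MULTILINE)
    return {int(num): verdict == "MEETS" for num, verdict in pattern.findall(reply)}


# Hypothetical standards and a hand-written reply standing in for a model response.
standards = ["Data is encrypted at rest", "Access is reviewed quarterly"]
prompt = build_compliance_prompt("All customer data is encrypted with AES-256.", standards)
sample_reply = (
    "The policy states data is encrypted with AES-256, which covers storage.\n"
    "Standard 1: MEETS\n"
    "The policy never mentions access reviews.\n"
    "Standard 2: FAILS\n"
)
verdicts = parse_verdicts(sample_reply)
print(verdicts)
```

The reasoning stays in the reply for a human to review, while the verdict lines give your workflow something structured to act on.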

Prompt chaining

Prompt chaining is when you break a complex task into smaller, focused steps by running a sequence of prompts.

It sounds similar to the last method right? But the difference is, we don’t give it all the prompts as steps up front, as that can overload the tool and make it try to focus on the last thing.

Instead, we give it one prompt, get the model’s output, and then do some operation on that output (e.g. transform it into some other format or analyze it for accuracy) and then pass it on to the same or another AI model along with a new prompt.

Why do this?

Because it’s especially useful when working with complex tasks.

Rather than relying on one mega-prompt to handle everything, chaining lets you design a clear process where each prompt solves a specific part of the job and has built-in safety or QA checks. That gives you control, makes the system more reliable, and allows you to handle larger or more complex data without running into memory issues.

It also means you can test and improve each step separately, just like how developers structure modular code.

For example

Let’s say you work on a business development team that regularly receives RFPs from enterprise clients. These are huge documents that are often dozens of pages. All of which contain technical requirements, legal language, and vendor comparison tables.

The goal is to extract key requirements, check your company’s ability to fulfill them, and draft custom responses that align with your existing product stack, which as you can imagine is a lot of effort and something you would want to try and get AI to help with.

The problem of course is that trying to do that with one prompt won’t work, because it’s too much information, and the stakes are too high for vague or incorrect answers.

So instead, you chain the process:

Prompt 1 – Requirement extraction

“From the following section of the RFP, extract a structured list of client requirements. For each, include a short label and full description. Output as JSON.”

Prompt 2 – Capability mapping

Then you would take the list of extracted requirements and run them through a second prompt:

“For each client requirement, indicate whether our current product or service can meet it. If yes, include a one-sentence explanation of how. If not, flag it as a gap.”

Prompt 3 – Response drafting

Now you take only the approved requirements and generate draft responses:

“Using the approved requirements and product capabilities, write a professional RFP response in our brand tone. Use short paragraphs, clear headers, and avoid technical jargon.”

It’s just 3 prompts, but this could let a small team process large RFPs faster and with more consistency, without losing control of the quality.

This alone could save them literally tens of thousands of dollars per year.
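To show the shape of a chain like this, here’s a hedged sketch in Python. The three prompts are the ones above. Everything else is an assumption for illustration: the function names, the JSON field names in step 2, and especially `fake_model`, which is a stand-in that returns canned replies so the example runs without any real AI service.

```python
import json
from typing import Callable, Dict, List

# Any function that takes a prompt and returns the model's reply as text.
Model = Callable[[str], str]


def extract_requirements(section: str, model: Model) -> List[Dict]:
    """Prompt 1: pull requirements out of one RFP section as JSON."""
    prompt = (
        "From the following section of the RFP, extract a structured list of "
        "client requirements. For each, include a short label and full "
        "description. Output as JSON.\n\n" + section
    )
    return json.loads(model(prompt))  # fails loudly if the reply is not JSON


def map_capabilities(requirements: List[Dict], model: Model) -> List[Dict]:
    """Prompt 2: check each requirement against what the company offers."""
    prompt = (
        "For each client requirement, indicate whether our current product or "
        "service can meet it. If yes, include a one-sentence explanation of "
        "how. If not, flag it as a gap. "
        # Assumption: the JSON field names below are added for this sketch.
        "Output as JSON with the fields label, can_meet, and explanation.\n\n"
        + json.dumps(requirements)
    )
    return json.loads(model(prompt))


def draft_response(approved: List[Dict], model: Model) -> str:
    """Prompt 3: write the response using only the approved requirements."""
    prompt = (
        "Using the approved requirements and product capabilities, write a "
        "professional RFP response in our brand tone. Use short paragraphs, "
        "clear headers, and avoid technical jargon.\n\n" + json.dumps(approved)
    )
    return model(prompt)


def run_chain(section: str, model: Model) -> str:
    requirements = extract_requirements(section, model)
    mapped = map_capabilities(requirements, model)
    # Plain code between prompts: only requirements we can meet move forward.
    approved = [item for item in mapped if item.get("can_meet")]
    return draft_response(approved, model)


def fake_model(prompt: str) -> str:
    """Stand-in for a real model call so the sketch runs offline."""
    if prompt.startswith("From the following section"):
        return json.dumps([
            {"label": "SSO", "description": "Must support single sign-on."},
            {"label": "On-prem", "description": "Must run on-premises."},
        ])
    if prompt.startswith("For each client requirement"):
        return json.dumps([
            {"label": "SSO", "can_meet": True, "explanation": "Supported via SAML."},
            {"label": "On-prem", "can_meet": False, "explanation": "Gap."},
        ])
    approved = json.loads(prompt.split("\n\n", 1)[1])
    return "Draft response covering: " + ", ".join(item["label"] for item in approved)


result = run_chain("Section 4.2: The vendor must support SSO and on-prem hosting.", fake_model)
print(result)
```

Notice that the filtering between steps 2 and 3 is ordinary code, not a prompt. That’s the “do some operation on that output” part of chaining, and it’s also where you could add a human review step.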

Scaffolding and output formatting

Once you’ve figured out how to prompt a model to get the kind of content you want, the next challenge is getting that content back in a format you can actually use. That’s where scaffolding comes in.

Scaffolding is about setting up a structure in your prompt that guides the model to return its output in a predictable format i.e. something that’s consistent, easy to parse, and ready for the next step in a workflow. Without this, even a perfectly written prompt can produce answers that are hard to work with, especially at scale.

You might think of it as templating, but it’s more flexible than that. A good scaffold tells the model what kind of information to return, in what structure, and how to format the sections. Done right, it saves time, reduces post-editing, and makes the results usable by other people, tools, or systems.

For example

Let’s say you’re helping a research team generate executive summaries from a batch of scientific papers. However, you don’t just want a generic summary. You need specific pieces of information pulled out and returned in a usable format.

You could just ask the tool to

“Summarize this paper.”

But by being lazy like this, the model will decide what to include, how long to make it, and what to emphasize, so you’ll get variation in quality every time.

The solution would be to scaffold it so we get repeatable results:

“Read the following abstract. Then return the following sections:

Main research question

Key findings

Methodology used

Any limitations or open questions

Return your response in bullet point format using markdown.”

Because every summary now follows the same pattern, you could plug that straight into a slide deck, database, or internal dashboard.

Even better?

If your team later wants to add a “Cited sources” section or remove “Limitations,” you would only have to tweak the scaffold, and not rewrite the whole prompt logic.
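That “tweak the scaffold” idea could look something like the sketch below, where the section list is plain data and the prompt is generated from it. The helper names and the sample reply are hypothetical, and the missing-section check is a simple text match rather than a robust parser.

```python
from typing import List, Sequence

SECTIONS: List[str] = [
    "Main research question",
    "Key findings",
    "Methodology used",
    "Any limitations or open questions",
]


def build_summary_prompt(abstract: str, sections: Sequence[str] = SECTIONS) -> str:
    """Generate the scaffolded prompt from a list of section names."""
    section_list = "\n".join(f"- {name}" for name in sections)
    return (
        "Read the following abstract. Then return the following sections:\n"
        f"{section_list}\n"
        "Return your response in bullet point format using markdown.\n\n"
        f"Abstract:\n{abstract}"
    )


def missing_sections(reply: str, sections: Sequence[str] = SECTIONS) -> List[str]:
    """Return the scaffold sections that do not appear in the model's reply."""
    lowered = reply.lower()
    return [name for name in sections if name.lower() not in lowered]


# Adding a section is a one-line change to the data, not a prompt rewrite.
with_sources = SECTIONS + ["Cited sources"]
prompt = build_summary_prompt("We study sleep patterns in shift workers.", with_sources)

# Hand-written reply standing in for a model response that skipped a section.
sample_reply = "- Main research question: ...\n- Key findings: ...\n- Methodology used: ..."
missing = missing_sections(sample_reply)
print(missing)
```

A check like `missing_sections` lets you catch a reply that ignored part of the scaffold before it reaches a slide deck or database.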

TL;DR

Scaffolding is how prompt engineers avoid edge cases where the model rambles, forgets instructions, or formats things inconsistently. It’s a low-effort way to enforce structure and reduce errors — especially when results are being reused by people who weren’t involved in the prompting!

Like I say, there’s a big difference in how Prompt Engineers work, and how beginners use the tools, and this is just scratching the surface.

How to become a Prompt Engineer (step-by-step roadmap)

So now that you understand what a Prompt Engineer actually does, let’s walk through how to get skilled up and hired in the role.

The good news is that it’s actually pretty straightforward!

Step #1. Learn Prompt Engineering

Obviously you need to learn how AI works under the hood and how to prompt it effectively, particularly Large Language Models, if you want to be a Prompt Engineer. The good news is you can take my course to learn everything you need to know.

It’ll not only teach you all the skills, but it will give you project work that you need for the next step.

Time to complete: 24 days

You can actually check out the first 5 hours of this course in the video below for free!

Step #2. Build a portfolio + GitHub profile to prove you can do the work

Once you’ve completed the course, you need to build a portfolio to show off your work.

Why?

Well if you remember earlier, I said that a lot of companies (especially in tech) don’t care about degrees. What they care about is that you can do the work, so you need to prove that and be able to show them what you can do.

This means creating a project portfolio to show your completed projects. The good news is you will have done some basic ones in the course above, so make sure to add those as well as any other projects you’ve built. You can even tweak a few if you like.

Don’t worry too much about having a ton of projects though. Just having a few to show you can do the work is enough to get you started.

This can be as simple as a WordPress site with your projects listed, the problem, the goals and what you did. Also, you don’t need to give too many details on the site. It’s more to showcase so you have things to chat about in the interview.

This course will run you through how to build your portfolio site:

Time to complete: 10 days

Now that you have this built, it's time to apply for roles and get hired!

Step #3. Apply for roles

Some people will tell you to apply for internships and things like that so that you get in-person experience.

Here’s the thing though: unless it’s your absolute dream company, and it’s the only way you’ll get your foot in the door, then don’t bother with internships.

Just apply for Junior Prompt Engineer roles right now instead, as this is the best way to get hands-on experience, and they will pay far better, so don’t undersell yourself. Apply now, and then move onto the next step so you can get the role once they ask you for the interview.

Also, if you follow the projects from the courses that we’ve shared above, most companies will be blown away by your current skill level. (This is a common quote from our students).

Check out this course on how to .

Time to complete: 5 days

It teaches you how to create a kick ass resume, get more interviews and blow the interviewers away.

You can start applying for jobs now. However, there is something else you need to do after you send those first applications.

Step #4. Prepare for the technical interview

We already know that tech roles are slightly different in that they don’t care about a degree - they just care that you can do the work.

Well part of that is having a portfolio. Another part is having an interview process where they ask you technical questions about the role, to prove you can do the job.

It can vary based on the company, but the process is usually:

The initial application and sending your portfolio

Either an online quiz when you apply or a quick chat with someone from HR (although not every company will do this). It’s a simple filter to see if you’re worth spending one-on-one time with, as they get thousands of applicants

An in-person technical interview where they ask you to solve specific Prompt Engineering focused questions + any niche topical questions

Potentially a small project to complete that would replicate your daily work. This will give them an idea of how you work, as they want to hire people who are not only capable but can deliver on time

A behavioral interview to see if you would make a good team fit

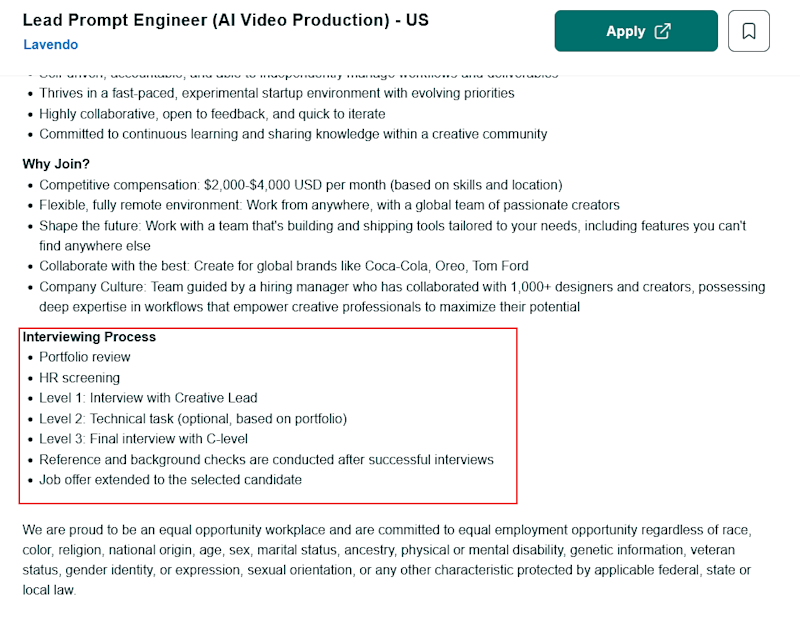

In fact, one of the jobs earlier even posted their application process information in the job listing that you can check out below:

The most difficult part is the technical interview, but as long as you understand the topic and can answer questions you’ll be fine.

Also, it's important to remember that they don’t really care about perfect ‘textbook’ answers. They just want to see how you might approach problems, and see if you have the capability to do what they need.

That being said, if you’re applying for a prompt engineering role that requires niche expertise, then you might also get questions around that topic, e.g. finance questions, or even coding questions if it's a coding-based project. If that is the case, then it’s definitely worth learning about data structures and algorithms, as these are the most common coding fundamentals they will ask about.

Time to complete: 40 days (assuming you need it for your particular application)

It’s also worth being aware of common best practices when applying for roles, similar to how you would with any other job interview.

Remember that tech jobs are more than just tech skills

In addition to the technical know-how that you’ve built up through courses and certifications, interviewers will be evaluating your soft skills and how well you can communicate, so:

Be prepared with examples showing how you’ve collaborated with co-workers or led projects in the past if possible

Be able to explain the decisions you made for the projects in your portfolio and discuss various trade-offs that you made

Make sure to demonstrate strong communication skills in writing and during the interviews (whether virtual or in-person). This includes very basic things like using proper grammar, avoiding spelling mistakes, and sending a thank you email within 24 hours of your interview

Don’t skip the usual interview prep

Like any other kind of interview, it’s always good to:

Research the company. Learn what you can about their needs and why they’re hiring for your role

Learn what you can about the people you’ll be interviewing with, and what their potential areas of focus will be. You can always ask when they offer the interview, and they will happily let you know

Practice, practice, practice. Do a mock interview with friends or family, or even just interview yourself, speaking your answers out loud. It’s amazing the difference this makes, and how much more polished you’ll be on the big day

Be on time (or even a little bit early) for the interview

Dress the part. Figure out the norm for the company’s culture (jeans and T-shirt or more professional?) and dress to fit in. If you’re unsure, err on the side of dressing up

Do all this, and you’ll smash the interview and get the job!

Step #5. Add to your skillset + learn Python automation

By this point you should already be working as a Prompt Engineer.

Great job!

However, it doesn’t hurt to add additional skills to your skillset to make your life easier.

If you remember, I mentioned earlier that you don’t need to learn to code to start as a prompt engineer, and that's true. However, if you pick up the basics, then you can use some coding skills to work with other AI tools alongside your day-to-day work.

Time to complete: 32 days.

I recommend Python for a few reasons:

It’s relatively easy to learn

It’s widely used - especially in AI and AI tools

It’s also perfect for automation projects

You can take this course with zero coding skills and be amazed at how helpful it can be.

It’s kind of like how once you learn Prompting, you can speed up tasks. But with scripting, you can take repeated tasks that you do day to day, write a script, click a button, and have it do it for you.
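To make that a little more concrete, here's a minimal sketch of the kind of script I mean. It assumes a made-up repeated task: writing the same summary prompt again and again for different pieces of text. The template wording, the placeholder names, and the `build_prompts` helper are all hypothetical and just for illustration. It only uses Python's built-in `string.Template`, so there's nothing extra to install.

```python
from string import Template

# Hypothetical prompt template. The placeholder names ($doc_type, $max_words,
# $text) are invented for this example, not taken from any course or tool.
SUMMARY_PROMPT = Template(
    "Summarize the following $doc_type in $max_words words or fewer:\n\n$text"
)


def build_prompts(template, rows):
    """Fill the template once per row and return the finished prompts."""
    return [template.substitute(row) for row in rows]


if __name__ == "__main__":
    # Example inputs. In real work these might come from a spreadsheet or CSV.
    rows = [
        {"doc_type": "email", "max_words": 30,
         "text": "Hi team, the launch has moved to Friday."},
        {"doc_type": "meeting note", "max_words": 50,
         "text": "We agreed to test two versions of the prompt."},
    ]
    for prompt in build_prompts(SUMMARY_PROMPT, rows):
        print(prompt)
        print("-" * 40)
```

Run it once and every prompt is written for you, rather than copying, pasting, and editing each one by hand. The same idea carries over to most small tasks you find yourself repeating.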

And that's it for the roadmap to becoming a Prompt Engineer

Like I said up top, it's fairly straightforward:

Total time to complete steps #1-3 and get hired: 39 days (or just over 1 month!)

Total time to complete the entire roadmap with optional bonus steps #4-5: 111 days (or just under 4 months)

Not bad for a completely new career, right!?

What are you waiting for? Become a Prompt Engineer today!

There’s never been a better time to train and learn prompt engineering. In my view it’s one of the best things you can do for your career, regardless of whether you’re looking to work as a Prompt Engineer or want to learn prompt engineering skills to advance your existing career path.

It’s clearly becoming one of the key skills now and in the future - regardless of industry.

I’ve given you all the steps and resources you need to get started and get hired above. If you can only manage 1-10 hours a week, you can still start this career within 1-3 months, and even quicker if you can spend a few more hours per week.

What do you have to lose? Get started now!

P.S.

Every course that I just shared is included in a single ZTM membership. Once you join, you get access to all of them and more.

Better still?

You also get access to the private ZTM Discord community, where you can ask questions of me, your other teachers, other students, and even current working Prompt Engineers.
