Don’t you just hate having to do repetitive work? It’s one of the things that frustrates me the most, because I know there has to be an easier or more efficient way of doing these tasks, so I can spend my time on more important things.

Which is why a few years back, I decided to learn how to use Large Language Models properly with ‘Prompt Engineering’ and it changed everything.

Almost overnight I learned how to:

Speed up my workflow

Reduce errors

And even enhance my creativity

The good news is that you can use this too!

In this guide I’ll cover what Prompt Engineering actually is, how it works, the most exciting reason why I think you should learn it outside of the huge salaries, and more!

Let's dive in…

Sidenote: If you want to dive deep into Prompt Engineering and learn how to actually work with LLMs, then check out my complete Prompt Engineering course:

You’ll learn everything you need to know to go from a beginner to someone who knows how LLMs work and how to use them effectively for your work and personal life better than 99% of others out there.

With that out of the way, let’s get into this guide!

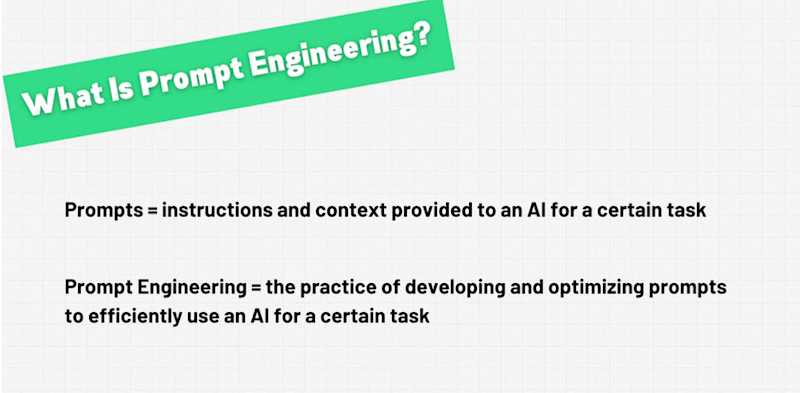

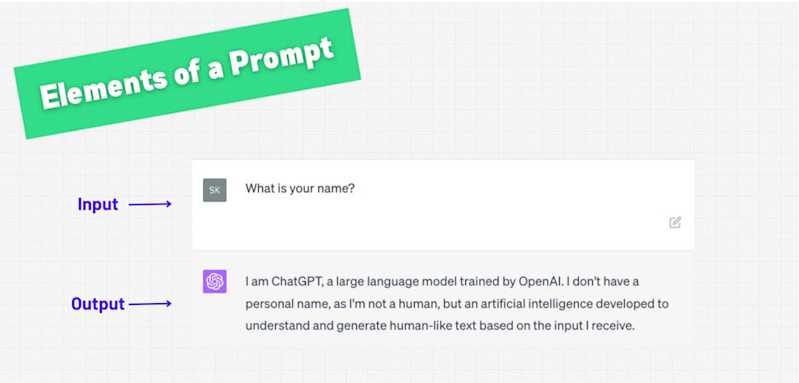

What is Prompt Engineering?

At its core, Prompt Engineering is about giving detailed instructions to LLMs, to ensure you get exactly what you want from an A.I.

It may sound complex, but it’s something you’re likely already doing in everyday life, at least on a basic level.

For example

Imagine you’re at McDonald’s and you want to order a Big Mac combo meal with Diet Coke and extra pickles. You could walk up to the cashier and say, “Me want food!” but chances are high that that wouldn’t give you the desired outcome.

So instead of talking like a caveman, you’re more specific: “I’d like a Big Mac meal with a Diet Coke and extra pickles, please.”

This clarity in your “prompt” helps the cashier understand exactly what you want, so you get your order just as you imagined it.

However, this interaction has a few built-in assumptions, and it’s at the core of why beginner LLM users struggle:

You assume the cashier knows what a “Big Mac meal” includes (the burger, fries, and a drink)

You expect that the extra pickles will go on your Big Mac, not in your Diet Coke

You also expect that if there’s any confusion, the cashier will ask for clarification

This is fine at McDonald’s, where you're dealing with logical people, but with Prompt Engineering, you’re dealing with an AI, not a human. So the types of assumptions you can make are going to be different.

Machines don’t come with shared experiences or an ability to ask clarifying questions unless you specifically instruct them to. That’s why the quality of the AI’s response often depends on the quality of your prompt. The clearer and more specific you are, the more accurate and useful the output will be.

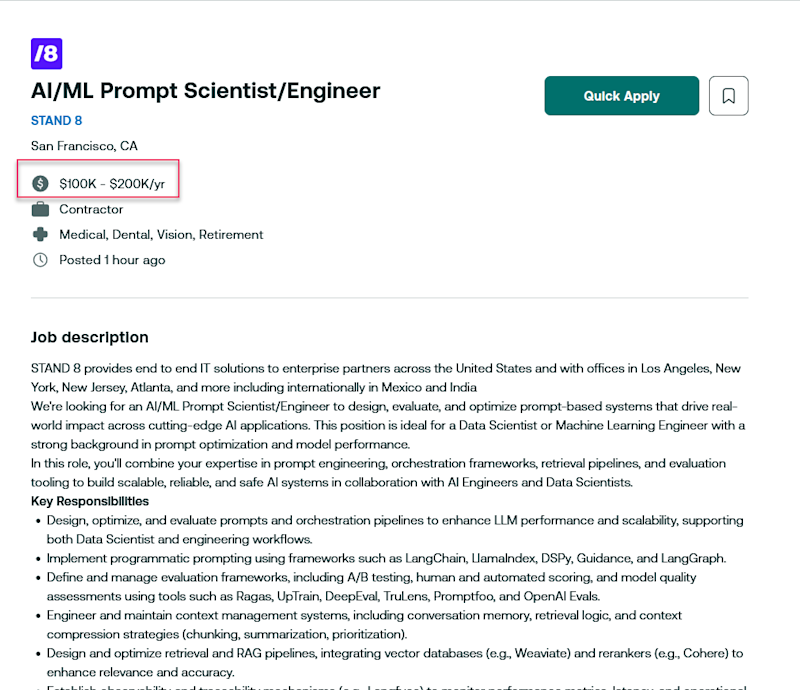

Basic users can get basic results, but with a deeper understanding of Prompt Engineering, you can unlock the full potential of these tools. This is why companies are beginning to see the value in this skill, and why Prompt Engineering is increasingly relevant.

Rather than just being subject experts, they also know how to craft prompts that yield more accurate, creative, and useful responses, transforming the AI from a simple tool into a powerful, adaptable assistant.

This not only saves you and them time but also cuts down on errors that come from manual repetition. AI makes errors, but so do humans!

To be clear, in my view Prompt Engineering is not a job in and of itself, except for a handful of special cases. Instead, it's a skill. But it's a skill that's applicable to virtually every job.

And while I don't think AI will be taking over your job anytime soon, someone that can do your job faster and more effectively by using AI...well, they might be able to take over your job.

Why should you learn Prompt Engineering?

I’ve already mentioned that it's a key skill for virtually any job, but the most interesting reason why I think you should learn this skill has nothing to do with these things.

So let me explain:

Right now, LLMs represent a complex frontier in AI, where we’re seeing unexpected improvements once a model reaches a certain level of complexity. Almost like they're evolving...

Erm what?

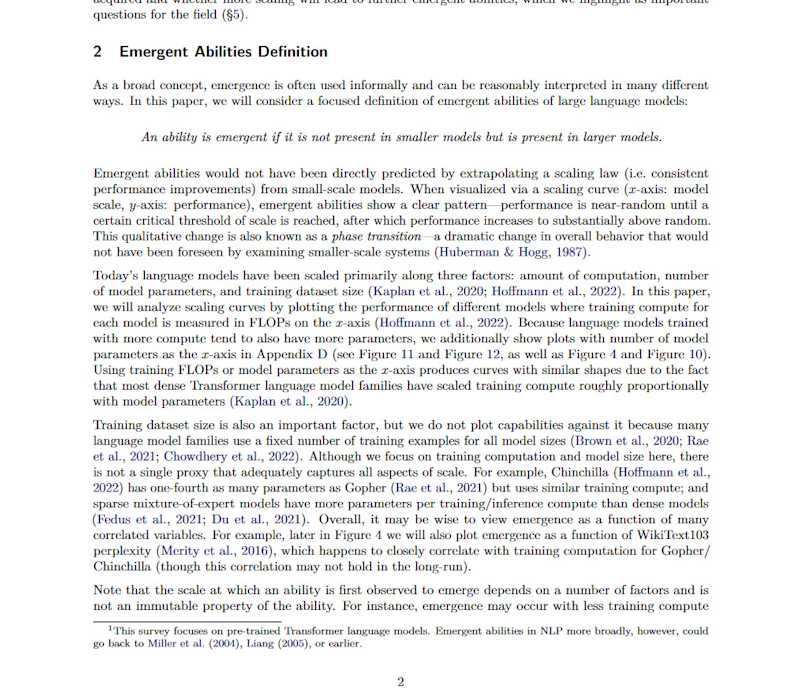

Basically, researchers at OpenAI and beyond have observed "emergent abilities", where LLMs seem to suddenly “click” into understanding tasks only once they reach a certain threshold, even if they struggled with those same tasks in the past.

This odd behavior hints that LLMs may not only learn predefined tasks but potentially make intuitive leaps, generalizing their training in ways we don’t fully understand.

For example

In this study by Google, Stanford, and others, each graph shows a specific skill or domain.

Along the x-axis of each graph is the model size, increasing from smaller to larger

Along the y-axis of each graph is the model’s ability at that specific skill or domain

As you can see, model capabilities do not improve linearly. Instead, they may be flat at first, but then at a certain model size the abilities emerge.

However, feeding these models more data isn't the only thing that causes these abilities to emerge - how we interact with them matters too.

You see, through effective Prompt Engineering we can guide models to access and apply their latent abilities, intentionally drawing out these "emergent" capabilities or enhancing their performance on specific tasks.

In other words, Prompt Engineering isn’t merely asking the model a question; it’s about crafting inputs that help the AI tap into its most relevant layers of knowledge, producing more accurate, insightful, or creative outputs.

How can this be?

Well, it’s because Prompt Engineering involves crafting inputs that guide LLMs to access the most relevant and useful layers of their capabilities, teasing out responses that go beyond basic training.

While a poorly designed prompt might yield straightforward answers, a well-thought-out one can inspire LLMs to handle more complex reasoning and make abstract connections. The process feels more like an exploration, uncovering the model’s “hidden” abilities.

This means that for developers, learning Prompt Engineering is more about developing a shared language with the model. It transforms interactions from basic input/output, into a form of collaboration, maximizing the model’s potential.

The more we do this, the better the results over time. If that’s not an exciting reason to get into this, I don’t know what is!

Oh wait, there’s one more reason…

Is Prompt Engineering the next programming language?

Kind of, yeah. But before you grab your torches and pitchforks, let me explain.

As you know, programming languages sit at progressively higher levels of abstraction above machine code:

Machine code is binary code (0s and 1s) that the CPU can directly execute. This is the lowest level of programming, as there’s no abstraction

Then there are low-level languages, like C, that offer some abstraction from machine code but still allow programmers to manage memory and control hardware directly

Then there are mid-level languages like C++ that offer both high-level and low-level features, allowing some hardware control but with enough abstraction to simplify many tasks

Then the most popular 'beginner friendly' programming languages like JavaScript and Python are high-level languages. They provide powerful abstractions for complex operations, letting programmers focus more on functionality and logic than hardware details

And then finally we have things like frameworks like React and Vue for JavaScript, as well as libraries like NumPy and Pandas for Python. These provide an even higher level of abstraction by utilizing pre-written code, structures, and tools that simplify common or complex tasks

As you can see, each step on this path is another step further away from the machine code. However, one of the defining concepts each time is that each level of abstraction allows you to greatly simplify tasks, because you can simply use more natural language (i.e. English) to code, which is then compiled down.

With that in mind, you can kind of think of Prompt Engineering as the next level of abstraction, where we’re now “programming in natural language” (but with knowledge of how things work).

Heck, if you’re using ChatGPT's code interpreter tool, you literally prompt it in plain language, it writes Python, and then it runs that code for you.

Of course purists would say prompt engineering isn’t “real” coding, and I’m not here to disagree. But similar arguments were no doubt made when higher-level languages like Python started coming out by those who were adept at lower-level languages like C and C++.

History doesn't repeat, but it does rhyme.

Nor am I saying that you don’t need to learn how to code or learn programming languages to do the job. You definitely should still learn them.

However, don’t skip out on the opportunity to also make your life easier and make yourself far more valuable in the eyes of recruiters. If I were to learn any new skill going into 2026, it would be this.

So with that being said, let's dive into the best Prompt Engineering techniques to use (and what to look out for).

Prompt Engineering Fundamentals

In this section I’m going to break down 4 of the fundamentals you need to be aware of when getting started with Prompt Engineering as a complete beginner.

Oh, and as an added bonus? I was feeling generous, so I shared the first 5 hours of my 22+ hour Prompt Engineering Bootcamp course over on the ZTM YouTube channel.

Check it out below for free!

I cover a lot more in the video and in my course, but here’s the mile-high overview of each.

Tip #1. Context is king

Context essentially allows you to "train" a custom model to perform the tasks you want.

For example

If you asked a human to help debug your code but didn't provide them with your code, or what error you're getting, they're not going to be able to help you right? Well it's the same with LLMs.

Context sets the stage by providing relevant background information to the LLM. This could be the code you’re working on, the nature of the dataset you’re working with, the specific problem you’re trying to solve, or the goal you’re aiming to achieve.

Let's go back to our McDonald’s example. Let's say this time it was an alien taking your order. You'd probably want to provide a whole lot more context:

I’d like a meal that includes a Big Mac, which is a sandwich made with two soft bread buns holding two cooked beef patties, a slice of cheese, chopped lettuce, sliced pickles (provide multiple additional pickles), diced onions, and a special sauce

On the side, I’d like fries, which are thin strips of potato fried until golden and sprinkled with salt

To drink, I’ll have a Diet Coke, which is a cold, bubbly, dark brown drink

These items together form a "meal", and therefore I will pay less than I would if I ordered them each separately

Okay, that's a bit excessive... but it's an alien, so it really does need to understand the full context if I want to get the right order! Well, the same applies when prompting an LLM for any task, including coding. You need to provide the model with context in order to ensure its output is useful for you.

For example

Let's say you want help analyzing some data using Python. You could start by providing the relevant context to the LLM, which serves as something like a preamble to your prompt:

I would like you to assist me with writing Python code. I am a beginner developer, so please ensure all code you provide is explained fully and includes detailed comments.

My goal is to analyze a dataset, which is in CSV format. This dataset contains columns for ‘Date,’ ‘Product,’ ‘Quantity Sold,’ and ‘Revenue.’ Each row represents a sales transaction with data on what was sold, how much, and the revenue generated.

Here's the cool thing: in a way, you've just custom-trained the LLM for your own task! This is something called "in-context learning" and research shows it significantly improves model performance.

As a quick, high-level explanation: models are trained on vast amounts of data, and this training causes them to "learn" information by adjusting the calculations inside the model.

Those calculations are frozen and can't be changed by you, but by providing context when prompting you are getting around that limitation by changing how the model runs its calculations at the time you give it your prompt - in essence, allowing the model to "learn" new information that is relevant to your specific task.
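As a rough sketch of what "context first, then instructions" can look like in code, the snippet below simply assembles one prompt string. The function name `build_prompt` and the sample task are assumptions made up for illustration; the context text is taken from the example above, and no particular LLM API is implied.

```python
def build_prompt(context: str, task: str) -> str:
    """Join a context preamble and a task into a single prompt string."""
    # Context goes first so the model reads the background before the request.
    return f"{context.strip()}\n\nTask: {task.strip()}"


CONTEXT = (
    "I would like you to assist me with writing Python code. "
    "I am a beginner developer, so please ensure all code you provide "
    "is explained fully and includes detailed comments.\n\n"
    "My goal is to analyze a dataset, which is in CSV format. "
    "This dataset contains columns for 'Date', 'Product', "
    "'Quantity Sold', and 'Revenue'. Each row represents a sales transaction."
)

# Hypothetical task, not taken from the article.
TASK = "Write a script that totals Revenue for each Product."

prompt = build_prompt(CONTEXT, TASK)
print(prompt)
```

Keeping the context in its own variable means you can reuse the same preamble across several tasks in one session.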

We could've just jumped right into the instructions and not provided any context. It certainly would've seemed quicker. But we'd likely spend more time going back-and-forth with the model or fixing mistakes after-the-fact.

Like most things in life, a little bit of up-front work makes everything that follows much easier!

Tip #2. Specificity is vital

So far we've provided the LLM with the relevant context. Now it's time to give it our instructions.

Being specific in your task description is critical. Vague instructions often lead the AI to interpret your request in ways you didn’t intend, or to fill in the gaps in ways you didn't expect, resulting in outputs that miss the mark.

By being precise and detailed in your prompts, you guide the AI toward producing results that align closely with your needs.

For example

Think back to our McDonald’s order.

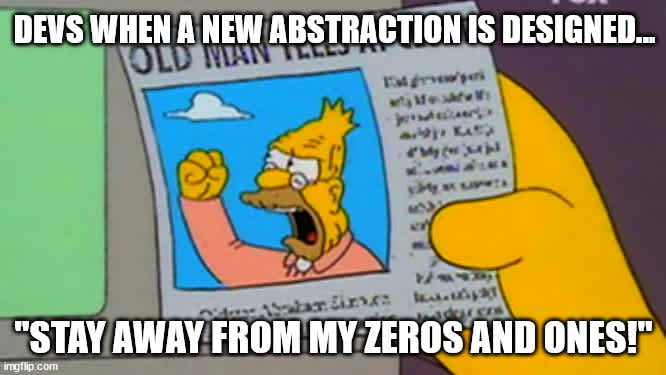

We probably thought we were being perfectly clear when we said “I’d like a Big Mac meal with a Diet Coke and extra pickles, please”.

But remember, there was some vagueness:

We didn’t specify that we expected french fries, since we assumed they would know that a “meal” includes french fries

And we didn’t specify we wanted the extra pickles on the Big Mac, rather than in our Diet Coke

These all seem obvious to you and me, and to the person working at McDonald’s. But would they be obvious to a person from another country who had never seen fast food before? Maybe not.

So think of AI as that person.

Although, full disclosure: yes, it is obvious to an AI.

But the point stands, particularly for more complex tasks like coding. You need to think through the ambiguities and try to be clear and specific.

For example

Asking the AI to "create a website" could result in a wide variety of outputs, some of which might not meet your requirements at all.

But if you specify, "Generate boilerplate HTML, CSS, and JavaScript files for a simple website with a header, footer, and three content sections," now the AI has a much clearer idea of what you’re looking for.

Better still, this specificity not only saves you time by reducing the need for revisions, but also minimizes frustration by ensuring that the AI’s output is more likely to meet your expectations from the start.

Handy right?

However, being specific doesn’t just benefit the AI; it also benefits you by making the entire process smoother and more efficient. When the AI understands exactly what you want, it can deliver exactly that, freeing you to focus on refining and building upon the results, rather than fixing them.

And before you think “I know how to be clear and specific already!”, let me tell you about something you almost certainly already do: the X-Y Problem.

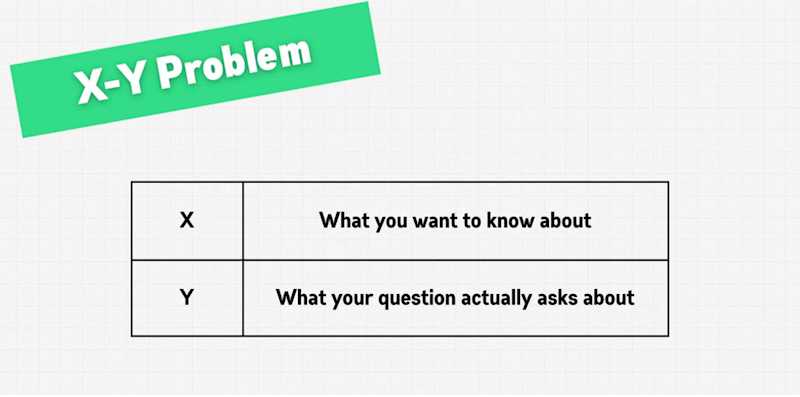

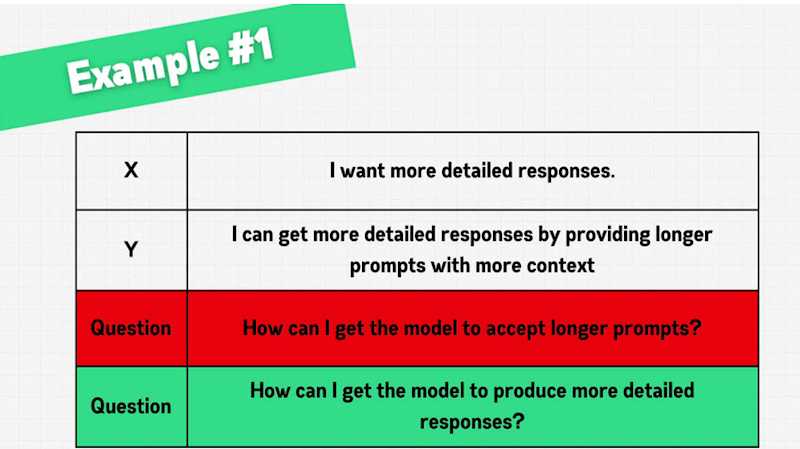

The X-Y problem is a classic miscommunication scenario where someone asks for help with a solution (Y) to an issue instead of describing the original problem (X).

This happens all the time in human communication, and will often drift over into our Prompt Engineering.

For example

Suppose a developer wants to sort a list of items and remove duplicates, but they’re only familiar with sorting functions, and not de-duplication methods.

Instead of asking:

"How can I remove duplicates from a sorted list?" (the actual problem, X), they ask,

"How can I sort my list to make duplicates easier to remove?" (the workaround, Y)

Since they "don't know what they don't know", the developer thinks the best way to remove duplicates is to sort the list first to make duplicates easier to identify, and then write code to manually iterate through the sorted list and remove consecutive duplicates.

Whereas if they had explained the original problem of removing duplicates, they would learn about faster and more effective solutions for de-duplication.
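To make the difference concrete, here's a minimal Python sketch (the function names are made up for illustration). The first function is the sort-then-scan workaround the developer had in mind; the other two are the kind of direct answers they would likely get by describing the real problem.

```python
def dedupe_sorted_manual(items):
    """The workaround (Y): sort, then drop consecutive duplicates by hand."""
    result = []
    for item in sorted(items):
        # After sorting, duplicates sit next to each other.
        if not result or result[-1] != item:
            result.append(item)
    return result


def dedupe_sorted_direct(items):
    """A direct answer to the real problem (X): a set removes duplicates."""
    return sorted(set(items))


def dedupe_keep_order(items):
    """If the original order matters, dict keys keep first-seen order."""
    return list(dict.fromkeys(items))


data = [3, 1, 2, 3, 1]
print(dedupe_sorted_manual(data))
print(dedupe_sorted_direct(data))
print(dedupe_keep_order(data))
```

Note that the set and dict approaches assume the items are hashable, which is true for numbers and strings.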

Tip #3. Zero, One, and Few-Shot Prompting

Sometimes, the most effective way to get the result you want is by showing the AI exactly what you’re looking for.

For example

If you’re asking the AI to output your data in valid JSON format, give it an example of the initial raw data being converted into exactly the JSON formatting you want.

Example Raw Data:

    Name: Jane Doe
    Age: 32
    Occupation: Engineer
    Skills: Python, JavaScript, C++
    Projects:
    - Project Name: AI Chatbot
      Year: 2022
      Technologies: Python, NLP
    - Project Name: Web Scraper
      Year: 2023
      Technologies: JavaScript, Node.js

Example JSON:

    {
      "name": "Jane Doe",
      "age": 32,
      "occupation": "Engineer",
      "skills": ["Python", "JavaScript", "C++"],
      "projects": [
        {
          "project_name": "AI Chatbot",
          "year": 2022,
          "technologies": ["Python", "NLP"]
        },
        {
          "project_name": "Web Scraper",
          "year": 2023,
          "technologies": ["JavaScript", "Node.js"]
        }
      ]
    }

We call this "one-shot prompting" because we provided one example.

However, there are other techniques you could use.

When you provide a vanilla prompt with no examples, that's "zero-shot prompting"

And if you provide multiple examples it's called "few-shot prompting"

In general though, the more 'shots' or examples you give, the better. However, I recommend that you scale up or down depending on the complexity of your task and how important accuracy is for your task.

For example

If your task is relatively simple and accuracy is of minimal importance (i.e. you will be reviewing the work), zero or one shot is sufficient.

Conversely, if you have a complex task or if it's very important that everything be as perfect as possible, multiple shots are best. I'd recommend at least 3, as research shows that accuracy generally improves up to 4-8 shots.

"Shot prompting" works because it reduces ambiguity and aligns the AI’s output with your vision. Also, the simple act of providing examples helps you think through exactly what you want the LLM to provide, and often that process itself is beneficial.

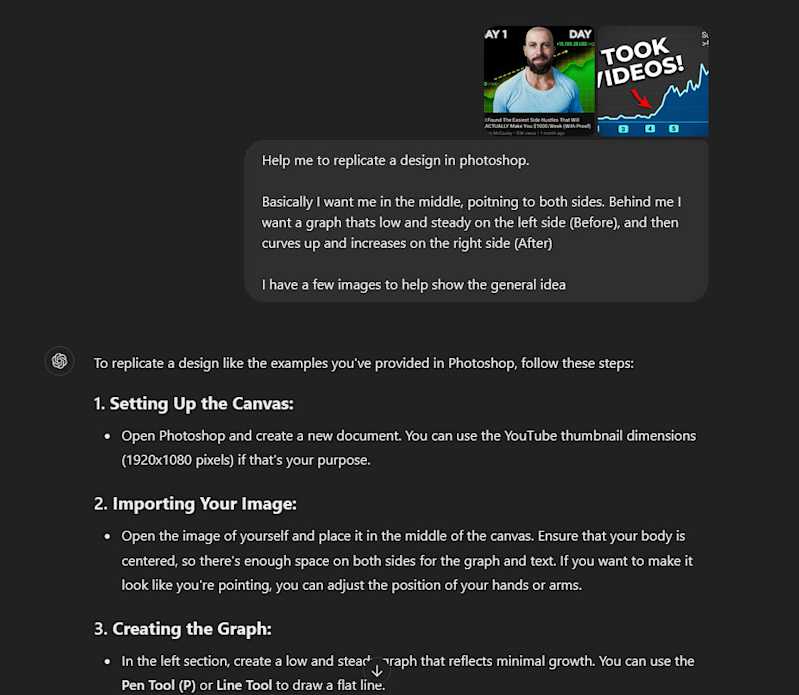

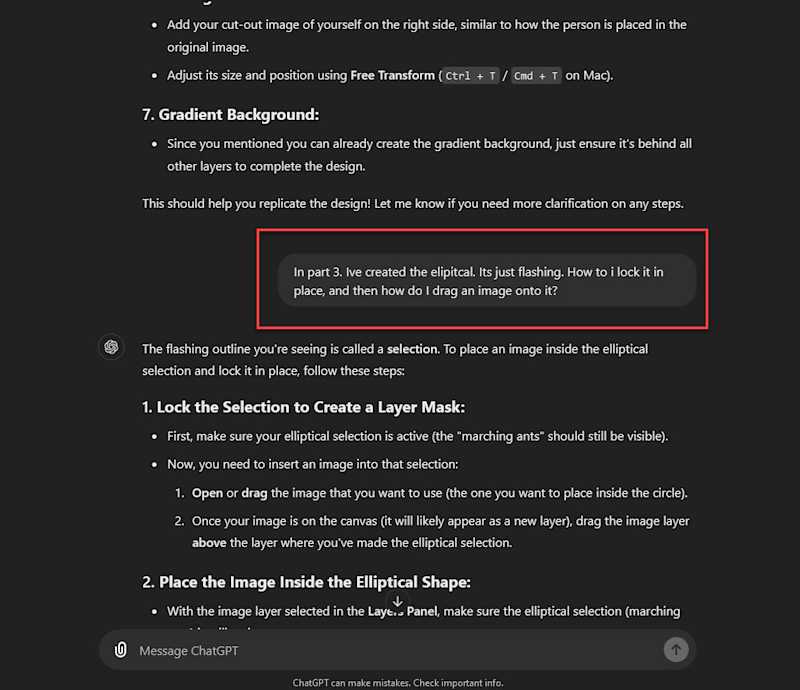

Editor's note: I’ve been learning to use Photoshop on the weekends, and wanted to replicate a design I saw, so I did this exact same thing. I told ChatGPT my goal, which elements I wanted to replicate in my own design, my level of editing experience, and then shared multiple example images.

The tool then walked me through the features inside of Photoshop to get 80% of the way there. Then I refined and asked more questions until it helped me get my desired goal.

It was insanely handy!

So as you can see, examples act as a bridge between your expectations and the AI’s understanding. They help clarify your intent and set a benchmark for the AI’s output, making the final result more useful and aligned with your needs.
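If you find yourself assembling these prompts often, a small helper can do it for you. The sketch below is one possible way to build zero-, one-, or few-shot prompts from a list of example pairs. The function name, the "Input/Output" labels, and the "Sam Lee" record are my own assumptions for illustration, not a standard format.

```python
import json


def build_shot_prompt(instruction, examples, new_input):
    """Build a prompt with zero, one, or several worked examples.

    examples is a list of (raw_text, parsed_dict) pairs; an empty list
    produces a zero-shot prompt.
    """
    parts = [instruction]
    for raw, parsed in examples:
        # Each example shows the raw input next to the exact JSON we expect.
        parts.append(f"Input:\n{raw}\nOutput:\n{json.dumps(parsed, indent=2)}")
    # End with the new input and an open "Output:" for the model to complete.
    parts.append(f"Input:\n{new_input}\nOutput:")
    return "\n\n".join(parts)


INSTRUCTION = "Convert the record to JSON."
EXAMPLES = [
    (
        "Name: Jane Doe\nAge: 32\nOccupation: Engineer",
        {"name": "Jane Doe", "age": 32, "occupation": "Engineer"},
    ),
]
# Hypothetical new record to convert.
NEW_RECORD = "Name: Sam Lee\nAge: 41\nOccupation: Designer"

zero_shot = build_shot_prompt(INSTRUCTION, [], NEW_RECORD)
one_shot = build_shot_prompt(INSTRUCTION, EXAMPLES, NEW_RECORD)
print(one_shot)
```

Adding more pairs to `EXAMPLES` turns the same call into a few-shot prompt.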

Tip #4. Iterate, Iterate, and Iterate Again!

Prompt engineering is rarely a one-and-done task. It’s actually more of an iterative process, where each round of prompting teaches you more about how to communicate effectively with AI.

Often, your first attempt won’t yield the perfect result, especially for complex tasks. But this is where the process becomes invaluable.

When the AI produces an output that isn’t quite right, it’s an opportunity to refine your approach. Perhaps the initial prompt was too broad, or maybe it lacked the necessary context. By analyzing what didn’t work, you can tweak your prompt - adding details, adjusting constraints, or rephrasing instructions - to guide the AI closer to your desired outcome.

This iterative process is not just about improving the current output but also about building your skill as a Prompt Engineer. Over time, you’ll develop a deeper understanding of how different wording or added details can dramatically enhance the AI’s performance.

Each refinement makes you more adept at crafting prompts that deliver precise, high-quality results, ultimately making you more efficient and effective in your work.

Prompt Engineering for Developers

Now that you know how to prompt, let's talk about what to prompt in order to help you write code more effectively and efficiently.

Well the world is your oyster really. There's a virtually limitless number of tasks an LLM can help you do more efficiently or effectively.

This of course doesn't mean it's writing all the code for you - not by a long shot, at least at this point - but sometimes it's helpful to have your own personal junior developer who can help with beginner or intermediate-level tasks and who you can talk through ideas or problems with.

So while the opportunities are endless, here's a quick-hit list of tasks that might inspire you to turn to AI next time you need assistance:

You can use AI to help write boilerplate code

Picture this: You’re starting a new project and need to generate boilerplate code. Normally, you may spend time writing out repetitive structures, right?

Personally, I always find it quite daunting to look at a blank code editor. There’s so much to do! Where do I start?

It’s much less daunting to look at a first draft or boilerplate. This first draft may be rough... like, really rough... but it’s still incredibly useful to have a draft to work off of. You can still slash and burn as much as you’d like, but you can keep and improve and build upon the sections you like as well.

Maybe the AI-generated draft even used some interesting code that you hadn’t thought about including.
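For a sense of what such a first draft might look like, here's a hand-written sketch of a small command-line script skeleton in Python. It's illustrative only, not actual model output, and the script's purpose and argument names are invented.

```python
import argparse
import logging


def parse_args(argv=None):
    """Parse command-line arguments (argv=None means use sys.argv)."""
    parser = argparse.ArgumentParser(description="Summarize a CSV file.")
    parser.add_argument("path", help="Path to the input CSV file")
    parser.add_argument(
        "--verbose", action="store_true", help="Enable debug logging"
    )
    return parser.parse_args(argv)


def main(argv=None):
    """Entry point: set up logging, then hand off to the real logic."""
    args = parse_args(argv)
    logging.basicConfig(level=logging.DEBUG if args.verbose else logging.INFO)
    logging.info("Would process %s here", args.path)
    # TODO: replace this placeholder with the actual processing code.
    return 0
```

In a real script you'd typically call `main()` under an `if __name__ == "__main__":` guard. From here it's up to you what to keep, cut, or rebuild.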

You can use AI for debugging assistance

When you hit an error that leaves you scratching your head, AI can help pinpoint the issue and suggest fixes.

For example

Suppose you're getting a NullReferenceException in C#, but the cause isn't immediately obvious.

The AI can analyze your code and explain that you're attempting to access a property of an object that is null. It might suggest initializing the object before using it or adding a null check to prevent the exception.

This is where context is particularly important - you'll want to copy + paste your code into your prompt so that the LLM can assist with troubleshooting more effectively. By doing so, you're providing the LLM with the relevant context it needs: your buggy code.
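The example above is in C#, but the same class of bug shows up in Python as an `AttributeError` on `None`. Here's a minimal sketch of the "before" and "after" an LLM might walk you through; the `User` class and function names are invented for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class User:
    name: str


def find_user(users: dict, key: str) -> Optional[User]:
    """Return the matching user, or None when the key is missing."""
    return users.get(key)


def greeting_buggy(users: dict, key: str) -> str:
    user = find_user(users, key)
    # Bug: if user is None, accessing .name raises AttributeError.
    return f"Hello, {user.name}!"


def greeting_fixed(users: dict, key: str) -> str:
    user = find_user(users, key)
    # Fix: check for None before touching the object's attributes.
    if user is None:
        return "Hello, guest!"
    return f"Hello, {user.name}!"
```

Pasting both the function and the full error message into your prompt gives the model what it needs to spot the missing check.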

You can use AI for automated refactoring

Refactoring code for readability and efficiency can be time-consuming, but an LLM can suggest improvements that align with best practices.

For example

If you have verbose conditional logic in your code, the LLM can recommend where to simplify it, identify unnecessary comparisons or redundant code, and provide cleaner, more efficient alternatives. This helps streamline your code, making it easier to read and maintain.
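Here's a small, made-up example of the kind of cleanup this can mean. Both functions below are hypothetical and assume `is_logged_in` is a real boolean and `cart_items` is a list.

```python
def can_checkout_verbose(cart_items: list, is_logged_in: bool) -> bool:
    # Nested branches and an explicit comparison to True.
    if is_logged_in == True:
        if len(cart_items) > 0:
            return True
        else:
            return False
    else:
        return False


def can_checkout(cart_items: list, is_logged_in: bool) -> bool:
    # Same logic as a single boolean expression.
    return is_logged_in and len(cart_items) > 0
```

It's worth asking the LLM to explain why the two versions are equivalent, so you can verify the refactor rather than take it on faith.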

You can use AI for test generation

Writing test cases ensures your code works as intended but can be a chore. LLMs can generate test cases based on your existing code.

If you've written a function, the AI can provide multiple test cases covering different scenarios. This ensures your function is robust and handles various inputs, improving code reliability without extra effort on your part.
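As a sketch of what that can look like, here's a hypothetical `average` function followed by the sort of test cases you might ask for: a typical input, a single value, negative numbers, and the empty-list edge case. The tests are plain functions with `assert`, so they run without any test framework.

```python
def average(values):
    """Return the arithmetic mean of a non-empty list of numbers."""
    if not values:
        raise ValueError("values must not be empty")
    return sum(values) / len(values)


def test_typical_values():
    assert average([2, 4, 6]) == 4


def test_single_value():
    assert average([5]) == 5


def test_negative_values():
    assert average([-1, 1]) == 0


def test_empty_list_raises():
    try:
        average([])
    except ValueError:
        return
    raise AssertionError("expected ValueError for an empty list")
```

You still need to read the generated tests yourself. A test that asserts the wrong expected value is worse than no test.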

You can use AI for documentation and commenting

Keeping code well-documented is essential but... let's be honest shall we? It's often neglected. A great use of LLMs is to generate comments and documentation for your code.

For example

If you have a function lacking a docstring or comments, the LLM can create detailed explanations of the function's purpose, parameters, and return values. This makes your code more understandable for others and yourself in the future.

You can even specify the level of detail to be included in your comments by telling it to provide comments or documentation that provide sufficient detail for a total beginner to follow along.
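For instance, given a bare function, you might get back something along these lines. The function itself is a made-up example, and the docstring layout (Args / Returns / Raises) is just one common convention, not the only option.

```python
def apply_discount(price: float, percent: float) -> float:
    """Return the price after applying a percentage discount.

    Args:
        price: Original price; must be zero or greater.
        percent: Discount as a percentage between 0 and 100.

    Returns:
        The discounted price, rounded to 2 decimal places.

    Raises:
        ValueError: If price is negative or percent is outside 0-100.
    """
    if price < 0 or not 0 <= percent <= 100:
        raise ValueError("price must be >= 0 and percent within 0-100")
    # Scale the price by the fraction that remains after the discount.
    return round(price * (1 - percent / 100), 2)
```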

You can use AI to help learn new languages or frameworks

This is one of the best use cases, in my view.

Tech is always evolving, and there are always new frameworks, libraries, or languages to learn (if you're like me, you have a long list of "Things I'll Learn Tomorrow" somewhere...).

LLMs can serve as a tutor of sorts, but what you might not appreciate is how customizable their teachings can become.

For example

If you already know JavaScript but want to learn Python, you can have the LLM teach you Python by explaining the similarities and differences each aspect has to JavaScript code.

Handy right!?
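Here's a tiny sketch of the kind of side-by-side explanation you could ask for: Python code with the rough JavaScript equivalent in the comments. The values are invented for illustration.

```python
# JavaScript: const prices = [5, 12, 8];
prices = [5, 12, 8]

# JavaScript: prices.filter(p => p > 6).map(p => p * 2)
# Python usually expresses filter + map as one list comprehension.
doubled_big = [p * 2 for p in prices if p > 6]

# JavaScript: `Total: ${total}` (template literal)
# Python: an f-string does the same job.
total = sum(prices)
label = f"Total: {total}"

# JavaScript: for (const [i, p] of prices.entries()) { ... }
# Python: enumerate() yields (index, value) pairs.
indexed = [(i, p) for i, p in enumerate(prices)]

print(doubled_big, label, indexed)
```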

Sure, you still need to learn via training programs and resources (AI works best when it's supplementing or 'augmenting' you, not replacing you), but still. It's super helpful for fast-tracking your learning.

Heck, you can even train it to teach you different things.

For example

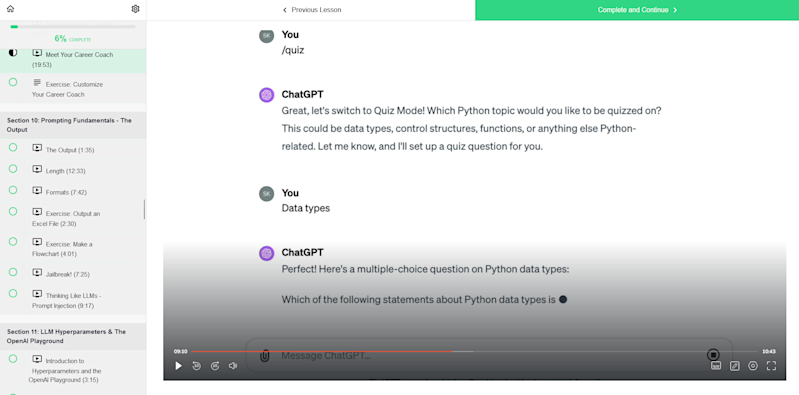

In my course I actually teach you how to create an AI Career Coach.

You can then use this to help you learn a new coding language or framework using the Feynman Technique. It even quizzes you, provides you with coding challenges and solutions, and even exports the information into a notebook you can use as a study or quick reference guide later on.

You can use AI to help with style and convention checks

Maintaining consistent coding style is important for team projects, and you can use LLMs to suggest adjustments to align with style guides.

For example

If your code deviates from standard conventions, the LLM can recommend changes to make it more idiomatic. This includes using language-specific features, following naming conventions, or simplifying expressions.

Adhering to conventions makes your code more professional and easier for others to understand, which is a big plus in the working world.
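As a small illustration in Python, here's a hypothetical function written against the grain of the language, followed by a more idiomatic version that follows PEP 8 naming and iterates directly over the items.

```python
def totalLength(wordList):
    # Non-idiomatic: camelCase names and index-based looping.
    total = 0
    for i in range(len(wordList)):
        total = total + len(wordList[i])
    return total


def total_length(words):
    # Idiomatic: snake_case names and direct iteration with sum().
    return sum(len(word) for word in words)
```

Both return the same result; the second is simply what most Python developers would expect to read.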

You can use AI to help with security enhancements

Security is crucial, and an LLM can help spot vulnerabilities in your code that you might not have noticed. It's like a second set of eyes.

For example

If you're constructing database queries with user input, LLMs can warn you about risks like SQL injection and suggest safer alternatives, such as using prepared statements or parameterized queries.

This helps you write more secure code by highlighting risks and offering safer practices.
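To see why this matters, here's a minimal sketch using Python's built-in `sqlite3` module with an in-memory database. The table, the usernames, and the function names are invented for illustration.

```python
import sqlite3


def find_user_unsafe(conn, username):
    # Vulnerable: user input is pasted straight into the SQL string.
    query = f"SELECT id, username FROM users WHERE username = '{username}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn, username):
    # Safer: the ? placeholder passes the input as data, not as SQL.
    query = "SELECT id, username FROM users WHERE username = ?"
    return conn.execute(query, (username,)).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
conn.executemany(
    "INSERT INTO users (username) VALUES (?)", [("alice",), ("bob",)]
)

# A classic injection string that turns the WHERE clause into "always true".
malicious = "nobody' OR '1'='1"

print(find_user_unsafe(conn, malicious))  # every row comes back
print(find_user_safe(conn, malicious))    # no rows match
```

With the unsafe version, the injected input rewrites the query and returns every user. With the parameterized version, the same input is treated as a literal (and non-existent) username.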

Final thoughts

So as you can see, Prompt Engineering has the potential to accelerate developers’ ability both to learn and write code. In fact, by learning to “program with natural language” alongside traditional training, it can have a compounding effect.

You not only learn to code, but you also get to practice using prompts to make your workflow more efficient (no more writing boilerplate code!).

For new devs this can be a game changer when joining the industry. Likewise, for more experienced devs it can make their day far easier. So it's a win-win!

P.S.

We’ve only scratched the surface here in this article and there’s so much more we can dive into.

Like any new tech you need to know the ins and outs, which is why I highly recommend you check out my Prompt Engineering Bootcamp course to understand this on a deeper level:

I guarantee that this is the most comprehensive, up-to-date, and best Prompt Engineering bootcamp course online, and includes everything you need to learn the skills needed to be in the top 10% of those using AI in the real world.

Better still, you can ask me questions directly in our private Discord community, and chat with fellow Prompt Engineers and students!
