Have you ever wondered why some websites process sales in a flash while others hang? Or why certain reports take seconds to run, while others grind on for minutes?

It’s not just about the server or the size of the data (although those can make a difference).

The reality is that tech is all about trade-offs, and database design is no exception. If a system is built to be fast for users, it’s often slower for analysis, and vice versa. The same design choices that make it great at one job are also what make it struggle at another, which is why we use different types of databases for different goals.

In this guide, we’ll look at the two classic approaches to storing and processing data (OLTP and OLAP), why they behave so differently, and the design choices that give each its strengths and their trade-offs.

So that by the end, you’ll know exactly when to use each one and why.

Sidenote: If you want to take a deep dive into this topic and more, check out my complete Data Engineering course:

It’s the only course you need to learn Data Engineering end-to-end.

You’ll build real-time pipelines with Apache Kafka & Flink, data lakes on AWS, machine learning workflows with Spark, and integrate LLMs into production-ready systems.

This course is specifically designed to help you launch your career as a future-ready Data Engineer, but that’s not the only benefit. We live in a world where data is becoming more and more important. So picking up these skills can help you advance in almost any tech career!

With that out of the way, let’s get into this guide.

What is OLTP and what does it do?

OLTP or Online Transaction Processing databases, specialize in very fast, very small units of work. Think “insert this row” or “update that balance”.

Basically, OLTP is all about capturing and storing information the moment it happens.

For example

Let’s say that you’re trying to grab the last seat on a flight home for the holidays. You find a ticket, the price is a steal, and so you click ‘book now’.

An OLTP system (whether it's MySQL, PostgreSQL, MongoDB, or SQL Server) is what's working in the background and locking in your booking so that nobody else can take it.

It does this even if the system is juggling a constant stream of tiny, targeted updates in real time, from thousands of people at once.

Impressive right?

The thing is, though, that kind of responsiveness is no accident. In fact, OLTP databases are built from the ground up to process huge volumes of transactions without ever sacrificing accuracy or consistency.

So let’s look at how they pull it off, starting with the way OLTP databases store and structure data.

How OLTP systems store and structure their data

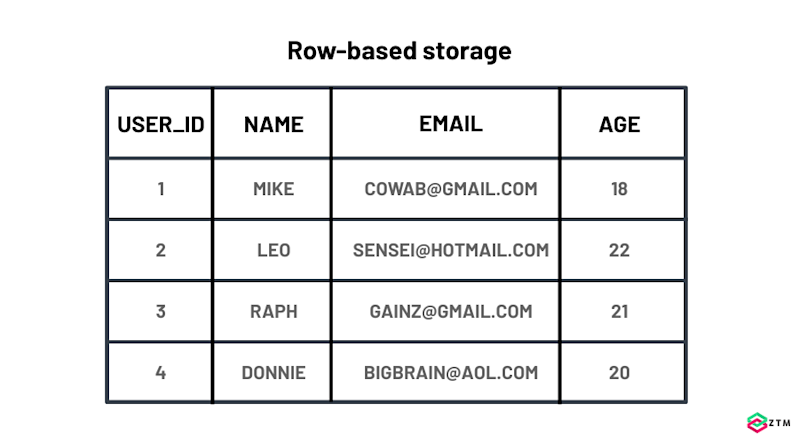

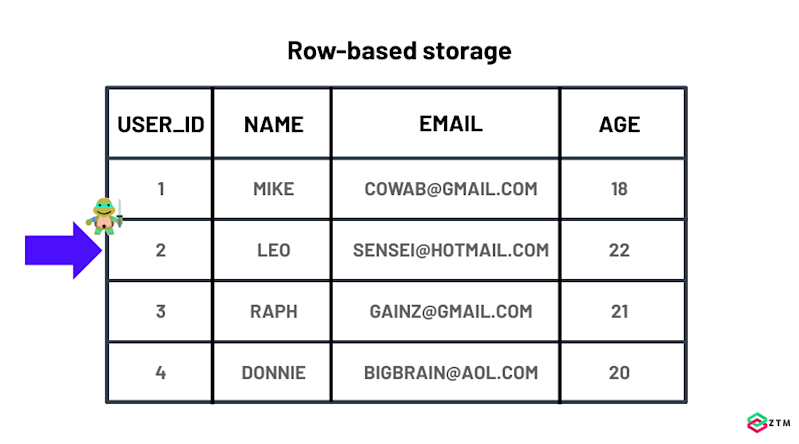

The reason OLTP systems can respond so quickly starts with how they organize their data. To achieve low latency when processing requests, OLTP databases use a so-called “row-based” storage, where all fields for a single record (shop item, user data, etc.) are stored together, one field after the other.

It’s kind of similar to a spreadsheet where each row holds all the details for one specific record, such as a single customer or order.

This makes sense since the queries these systems handle look like:

Get an order with this ID

Update the address of this user

Get the top 10 comments for a blog post

Etc.

Storing all fields together is what allows an OLTP database to quickly fetch all fields for a single record.

Conversely, when the database needs to update something, it can get that exact row and write new data with the minimal number of I/O operations. (The trade-off being that it can be slow for deeper analytical processing, which is why we use OLAP for analysis, but more on that later.)

How data is organized

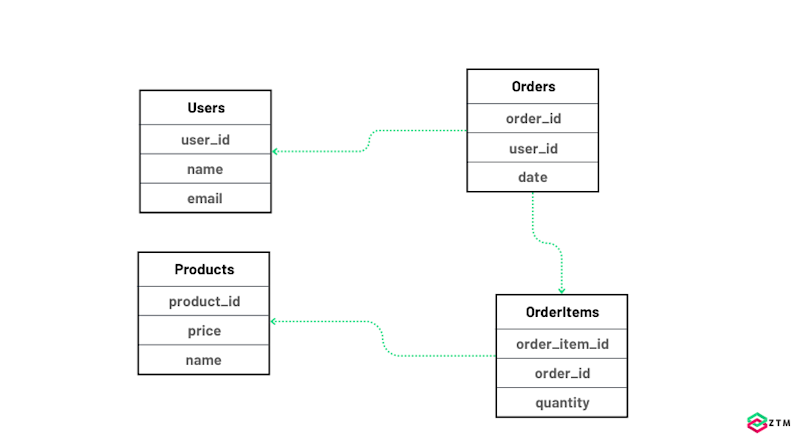

Data in an OLTP database is usually not stored in one table, simply because cramming all data in one table will create massive data redundancy among a myriad of other problems.

This is why most OLTP databases use a normalized schema, which means they store each piece of information only once and then connect it to other data by using IDs.

This design helps keep data clean and consistent, and keeps the amount of data touched by each read/write operation small, making them very fast. (A caveat is that sometimes it can get in the way of read performance, since one operation has to read multiple tables, so denormalization is a common optimization technique.)

For example

Instead of storing a customer’s name and email in every order, the system puts that information in a single Users table. Every time an order is placed, the Orders table just references the customer’s ID.

This means that if the customer changes their email, you only have to update it in one place, and all related orders instantly have the correct information.

Handy right?

However, even with data neatly organized into separate tables, the system still needs a way to jump directly to the right row without going through every record in the entire table.

This is where indexes come in, which are the second pillar of OLTP performance.

How OLTP uses indexes

The best way to think of these is kind of like an index at the back of a book. They list out all the the topics and allow you to quickly find which pages in the book mention a certain word, so you don’t have to flip through the whole book.

Well, database indexes work in the same way. A database index allows a database to pinpoint the location of a record on a disk. So instead of scrolling through hundreds of rows to find a customer by their ID, the index tells the database exactly where that row is.

This means it can jump straight to the right spot and fetch the data almost instantly.

For example

If you search for a customer by their ID, the database uses the index to find that exact record.

Without it, the system would have to read every row in the table until it found a match, which would be much slower.

Smart, right?

To speed it up even further, most systems will keep indexes on the fields that are used most often for lookups, such as account numbers, product SKUs, or booking references. This way, it can jump to them quickly, and it’s one of the key reasons they can handle thousands of simultaneous queries without slowing down.

That being said, though, speed alone is not enough.

We also need to make sure that every transaction is accurate and consistent, even when hundreds of changes happen at the exact same moment, and that’s where data consistency comes in.

How OLTP keeps your data accurate and up-to-date

When several people try to change the same information at once, an OLTP system makes sure the data remains consistent and correct.

For example

Imagine again that we’re booking a plane ticket for the holidays. And because it's a peak travel period, there might be a few people all looking to book that exact same seat.

Now lets imagine that someone else has just beat you to booking, but their payment failed. In this instance, the whole action is cancelled, and the seat shows as available again which is what you want if you're the airline and you want to sell all your seats.

To achieve this, most OLTP systems follow ACID principles, which are the rules that keep transactions reliable:

Atomicity means that a transaction is all or nothing. As another example, if you transfer money from your account to someone else’s, the system must either take the money out of your account and put it into theirs or do nothing at all. There’s no situation where the money disappears or is duplicated

Consistency guarantees that every committed transaction leaves the database in a state that satisfies all defined rules. One example of consistency is “referential integrity,” which is simply the rule that whenever one record points to another, the record it points to must actually exist, so the database never contains broken or missing links between related data

Isolation makes sure that transactions don’t interfere with each other while running. If you’re booking a hotel room and someone else is booking the same one at the same moment, you won’t see half-finished updates that could cause confusion or double-booking. (although some databases have various “levels” of isolation, which can produce odd effects)

Durability means that once a transaction is confirmed, it’s stored permanently. Even if the power goes out or the server crashes right after you click “book,” your reservation is still there when the system comes back online

(A small caveat is that some OLTP systems, most notably NoSQL databases, may relax these properties to achieve higher performance).

OLTP Trade-offs

OLTP engines are Formula 1 cars in that they're unmatched at rapid, tightly controlled laps (single-row reads and writes), but not built for long-haul freight.

In fact, the very features that make them perfect for high-speed transactions such as row-oriented storage, heavy indexing, strict normalization, all turn into sandbags when you ask heavyweight questions like:

“Show total revenue by product category for the past 12 months”

“Find the average session length per user segment, broken out by week”

To answer queries like these, the database has to pull millions of rows from many tables, join them, and scan columns it normally ignores. Even with good indexes, these queries are I/O-hungry and can slow down your application trying to serve users.

Obviously we need a better option, because in today’s data-driven industries, companies don't just want to know that a single order went through. They also want to analyze it so they can see sales trends over the past year, spot their best-selling products, or find out which customers are most active.

That’s why we also use OLAP (online analytical processing) systems.

What is OLAP and what does it do?

OLAP data stores are designed from the ground up for large-scale analysis, able to scan millions or even billions of rows, summarizing and combining information to reveal patterns. Some examples of OLAP datastores include Snowflake, Google BigQuery, Amazon Redshift, and many others.

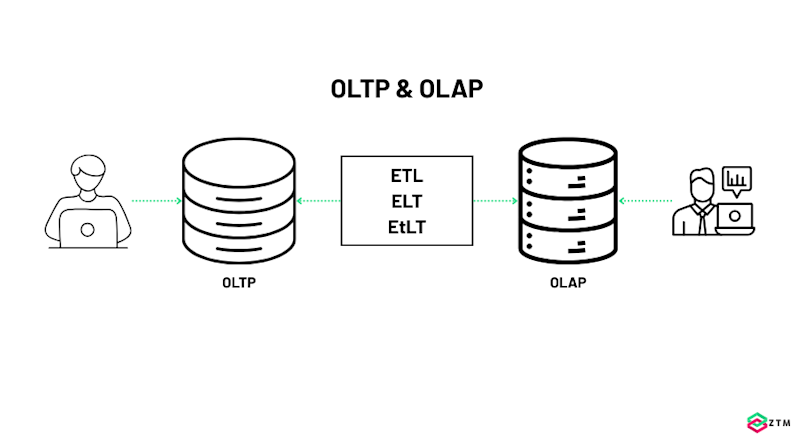

These work by sitting downstream from your OLTP database. Data is copied from an OLTP database to an OLAP database, and there are a few different ways of doing it.

Extract, Transform, Load - the data is first cleaned and reshaped into analysis-ready tables before it is written to the OLAP store

Extract, Load, Transform - when the data is copied as is from an OLTP databases, and only transformed for analytical purposes later

Extract, transform, Load, Transform - This is a hybrid pattern where you apply a quick, light transform (the lowercase “t”) on the way out of the source system to fix types, then load the data. The heavier, business-logic-driven Transform (the capital “T”) happens after the data is safely inside the OLAP datastore

A common approach is to copy data periodically in large batches from an OLTP database into an OLAP datastore, but modern OLAP implementations also support ingestion almost in real time.

That separation keeps OLTP fast for everyday operations while giving OLAP its own copy to reorganize and prepare for heavy analytical work without slowing anything down.

How?

Well, they use a few techniques, starting with how they structure data.

How OLAP systems store and structure their data

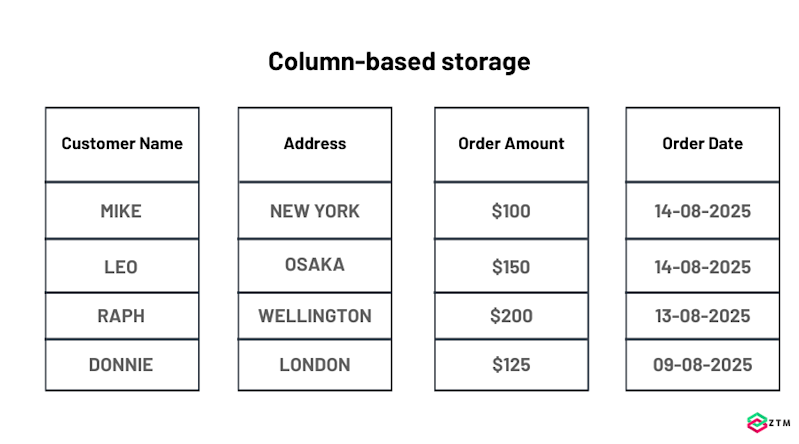

As I mentioned earlier, most OLTP databases store data in a row-based format. Think of it like one huge spreadsheet containing all your data. On that spreadsheet, each row is a complete record: customer name, address, order amount, date, and so on.

This works great for transactions because you usually want all of that information at once. However, when you’re doing analysis, you are usually interested in a few columns (e.g., get total revenue per user) and not whole rows.

For example

Let’s say you want to calculate total sales by month. In a row-based system, the database still has to scan through every single row, even though you only care about two pieces of information: the “sale amount” and the “sale date.”

That’s a lot of data to read, just to ignore it.

OLAP flips this on its head. Instead of storing full rows together, it stores all the values from each column together.

Imagine splitting that big spreadsheet into separate, smaller spreadsheets:

One for customer names

One for addresses

One for sale amounts

One for dates

For a human, this might be harder to read and not make as much sense. But for a computer, it makes it much easier and faster to work with. If you want total sales by month, your system only has to open the “sale amount” and “sale date” sheets and completely skip the rest.

This has two major advantages.:

First, there’s far less data to read. Analytical queries usually focus on a small subset of queries, and the system can jump straight to the exact columns it needs and ignore everything else

Second, values in a single column tend to repeat (like dates or product categories), which makes them easy to compress. That saves space and speeds up scanning even more

Doesn't seem like much of a difference, but columnar storage is a big part of why OLAP can fly through millions or even billions of rows when running analytical queries.

Another part of that speed comes down to how that data is organized.

How OLAP organizes data

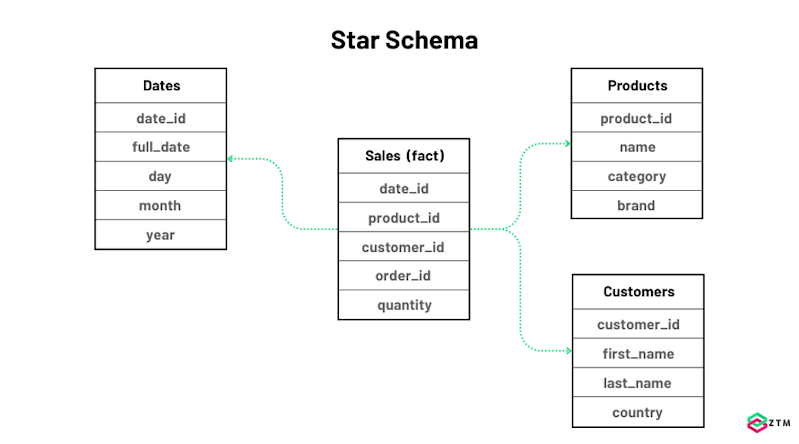

As I mentioned earlier, OLTP databases usually use a normalized schema that connects multiple small related tables. This avoids duplication and can be great for transactions, but it can be a headache for analysis because of the way this design works.

Why?

Well, the best way to imagine OLTP data organization is like a subway train.

You're standing at platform A and you want to get to station D to pick up a file from someone. However, to get there, you first have to stop at A, then B, then C, before finally arriving at D. This means that every query becomes a slow, winding route through multiple tables.

As you can imagine, this is fairly long-winded and one of the reasons why we don't use OLTP for analysis.

OLAP uses a set of completely different approaches to structuring data. One of the most popular is called a star schema, which organizes data around a central fact table, which is surrounded by dimension tables, each holding descriptive details (customers, products, dates, etc).

The fact table represents business events (like orders), while dimension tables provide descriptive attributes.

If we go back to the train analogy again, this is more like starting at grand central station and then catching a train directly to station D with no unnecessary stops or reroutes. Then, once you have the file, you jump back on the same train and are at the hub nice and fast.

Handy, huh?

This star schema layout makes finding things quick, and the column-based storage makes reading them even quicker.

For example

A retailer might collect all the day’s sales from hundreds of stores and load them into the warehouse at midnight. Then the OLAP system can transform and clean that data and store it in a format ready for analysis.

This data batching has numerous benefits:

It takes pressure off operational systems

It ensures everyone is working from a consistent snapshot

It allows time for deduplication, cleaning, and reorganizing before analysis

However, it does come with a tradeoff in certain situations.

For example

Let’s imagine that you want to run a real-time dashboard or track for fraud prediction. Well, in this situation, you can’t be waiting an hour or even 24 hours for that to come through. You need to know about it right away!

This is why you can also get real-time analytics tools that fit between OLTP and OLAP systems so that you can get both historical insight and low latency. It's a bigger topic and worth diving into, so I'll cover it more in a future guide. But for now, it's just worth being aware of this tradeoff.

It’s not about OLTP vs OLAP

So as you can see, OLTP and OLAP aren’t in competition, because they’re two halves of the same system:

OLTP keeps your day-to-day running without a hitch

While OLAP turns that activity into insights, you can act on

Together, they give you speed where it counts and depth when it matters.

However, if you want those insights as they happen, you’ll need to step into real-time analytics, which is exactly what I’ll cover in my next guide. 😉.

P.S.

And remember, if you want to take a deep dive into this topic and much more, check out my complete Data Engineering course:

It’s the only course you need to learn Data Engineering end-to-end.

You’ll build real-time pipelines with Apache Kafka & Flink, data lakes on AWS, machine learning workflows with Spark, and integrate LLMs into production-ready systems. This course is specifically designed to help you launch your career as a future-ready Data Engineer, but that’s not the only benefit! We live in a world where data is becoming more and more important, so picking up these skills can help you advance in almost any tech career.

And as an added bonus?

Once you join, you’ll have access to our private Discord community, where you can ask questions to me, as well as other students and other tech professionals.

Best articles. Best resources. Only for ZTM subscribers.

If you enjoyed this post and want to get more like it in the future, subscribe below. By joining the ZTM community of over 100,000 developers you’ll receive Web Developer Monthly (the fastest growing monthly newsletter for developers) and other exclusive ZTM posts, opportunities and offers.

No spam ever, unsubscribe anytime